We provide real AI-100 exam questions and answers (braindumps) in two formats: PDF and practice tests. Pass the Microsoft AI-100 exam quickly and easily. The AI-100 PDF is available for reading and printing, so you can print it and practice as many times as you like. With the help of our Microsoft AI-100 dumps in PDF and VCE format, you can easily pass the AI-100 exam.

Check AI-100 free dumps before getting the full version:

NEW QUESTION 1

You create an Azure Cognitive Services resource.

A developer needs to be able to retrieve the keys used by the resource. The solution must use the principle of least privilege.

What is the best role to assign to the developer? More than one answer choice may achieve the goal.

- A. Security Manager

- B. Security Reader

- C. Cognitive Services Contributor

- D. Cognitive Services User

Answer: D

Explanation:

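The Cognitive Services User role lets you read and list the keys of Cognitive Services resources. Unlike Cognitive Services Contributor, it does not let you create, update, or delete resources.

References: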

https://docs.microsoft.com/en-us/azure/role-based-access-control/built-in-roles
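To illustrate the least-privilege reasoning, the sketch below picks, among the roles that grant the key-listing operation, the one that grants the fewest operations from a small sample. The permission sets are simplified assumptions (the real built-in role definitions contain more actions), `SAMPLE_OPERATIONS` is a hypothetical list, and the code does not call Azure.

```python
from fnmatch import fnmatchcase

# Simplified permission sets (an assumption for illustration; the real
# built-in role definitions contain additional actions).
ROLE_ACTIONS = {
    "Security Reader": ["Microsoft.Security/*/read"],
    "Cognitive Services Contributor": ["Microsoft.CognitiveServices/*"],
    "Cognitive Services User": [
        "Microsoft.CognitiveServices/*/read",
        "Microsoft.CognitiveServices/accounts/listkeys/action",
    ],
}

# Hypothetical sample of operations used to compare how much each role allows.
SAMPLE_OPERATIONS = [
    "Microsoft.CognitiveServices/accounts/read",
    "Microsoft.CognitiveServices/accounts/write",
    "Microsoft.CognitiveServices/accounts/delete",
    "Microsoft.CognitiveServices/accounts/listkeys/action",
    "Microsoft.CognitiveServices/accounts/regeneratekey/action",
    "Microsoft.Security/alerts/read",
]


def grants(role, operation):
    """True if any action pattern of the role matches the operation (* is a wildcard)."""
    return any(fnmatchcase(operation, pattern) for pattern in ROLE_ACTIONS[role])


def least_privileged_role(required):
    """Among roles that grant `required`, pick the one granting the fewest sample operations."""
    candidates = [role for role in ROLE_ACTIONS if grants(role, required)]
    if not candidates:
        raise ValueError(f"no role grants {required}")
    return min(candidates, key=lambda role: sum(grants(role, op) for op in SAMPLE_OPERATIONS))


print(least_privileged_role("Microsoft.CognitiveServices/accounts/listkeys/action"))
# prints "Cognitive Services User"
```

Both Cognitive Services roles can list keys, but the Contributor wildcard also covers write, delete, and key regeneration, so the User role is the narrower fit.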

NEW QUESTION 2

You are designing an AI solution that will analyze millions of pictures.

You need to recommend a solution for storing the pictures. The solution must minimize costs. Which storage solution should you recommend?

- A. an Azure Data Lake store

- B. Azure File Storage

- C. Azure Blob storage

- D. Azure Table storage

Answer: C

Explanation:

Azure Data Lake Storage is somewhat more expensive, although the prices of the two are close. Blob storage also offers more pricing options based on how frequently you need to access your data (for example, the hot and cool access tiers).

References:

http://blog.pragmaticworks.com/azure-data-lake-vs-azure-blob-storage-in-data-warehousing

NEW QUESTION 3

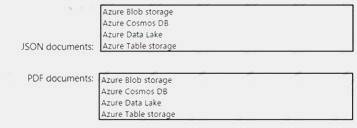

You need to build a sentiment analysis solution that will use input data from JSON documents and PDF documents. The JSON documents must be processed in batches and aggregated.

Which storage type should you use for each file type? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

- A. Mastered

- B. Not Mastered

Answer: A

Explanation:

References:

https://docs.microsoft.com/en-us/azure/architecture/data-guide/big-data/batch-processing

NEW QUESTION 4

You need to design an application that will analyze real-time data from financial feeds. The data will be ingested into Azure IoT Hub. The data must be processed as quickly as possible in the order in which it is ingested.

Which service should you include in the design?

- A. Azure Event Hubs

- B. Azure Data Factory

- C. Azure Stream Analytics

- D. Apache Kafka

Answer: D

NEW QUESTION 5

Your company has 1,000 AI developers who are responsible for provisioning environments in Azure. You need to control the type, size, and location of the resources that the developers can provision. What should you use?

- A. Azure Key Vault

- B. Azure service principals

- C. Azure managed identities

- D. Azure Security Center

- E. Azure Policy

Answer: E

Explanation:

Azure Policy lets you create, assign, and manage policy definitions that enforce rules on the resources deployed in a subscription, such as which resource types can be created, which SKUs (sizes) are allowed, and which locations are permitted. A service principal is an identity that an application uses to access resources; it does not restrict the type, size, or location of the resources that developers provision.

References:

https://docs.microsoft.com/en-us/azure/governance/policy/overview

NEW QUESTION 6

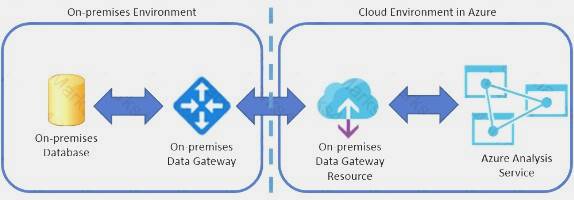

You are building an Azure Analysis Services cube for your AI deployment.

The source data for the cube is located in an on-premises network in a Microsoft SQL Server database. You need to ensure that the Azure Analysis Services service can access the source data.

What should you deploy to your Azure subscription?

- A. a site-to-site VPN

- B. a network gateway

- C. a data gateway

- D. Azure Data Factory

Answer: C

Explanation:

Azure Analysis Services uses the On-premises Data Gateway to connect to on-premises data sources. This means you can connect tabular models hosted in Azure Analysis Services to on-premises data sources, such as the SQL Server database in this scenario, through the On-premises Data Gateway.

References:

https://biinsight.com/on-premises-data-gateway-for-azure-analysis-services/

NEW QUESTION 7

Your company plans to implement an AI solution that will analyze data from IoT devices.

Data from the devices will be analyzed in real time. The results of the analysis will be stored in a SQL database.

You need to recommend a data processing solution that uses the Transact-SQL language. Which data processing solution should you recommend?

- A. Azure Stream Analytics

- B. SQL Server Integration Services (SSIS)

- C. Azure Event Hubs

- D. Azure Machine Learning

Answer: A

Explanation:

References:

https://www.linkedin.com/pulse/getting-started-azure-iot-services-stream-analytics-rob-tiffany

NEW QUESTION 8

Your company recently purchased several hundred hardware devices that contain sensors.

You need to recommend a solution to process the sensor data. The solution must provide the ability to write back configuration changes to the devices.

What should you include in the recommendation?

- A. Microsoft Azure IoT Hub

- B. API apps in Microsoft Azure App Service

- C. Microsoft Azure Event Hubs

- D. Microsoft Azure Notification Hubs

Answer: A

Explanation:

References:

https://azure.microsoft.com/en-us/resources/samples/functions-js-iot-hub-processing/

NEW QUESTION 9

You need to design the Butler chatbot solution to meet the technical requirements.

What is the best channel and pricing tier to use? More than one answer choice may achieve the goal. Select the BEST answer.

- A. standard channels that use the S1 pricing tier

- B. standard channels that use the Free pricing tier

- C. premium channels that use the Free pricing tier

- D. premium channels that use the S1 pricing tier

Answer: D

Explanation:

References:

https://azure.microsoft.com/en-in/pricing/details/bot-service/

NEW QUESTION 10

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have Azure IoT Edge devices that generate streaming data.

On the devices, you need to detect anomalies in the data by using Azure Machine Learning models. Once an anomaly is detected, the devices must add information about the anomaly to the Azure IoT Hub stream. Solution: You deploy Azure Functions as an IoT Edge module.

Does this meet the goal?

- A. Yes

- B. No

Answer: B

Explanation:

Instead, use Azure Stream Analytics and the REST API.

Note: Available both in the cloud and on Azure IoT Edge, Azure Stream Analytics offers built-in, machine learning-based anomaly detection capabilities that can be used to monitor the two most commonly occurring kinds of anomalies: temporary and persistent.

Stream Analytics supports user-defined functions, via REST API, that call out to Azure Machine Learning endpoints.

References:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-machine-learning-anomaly-detection
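Stream Analytics exposes its anomaly detection as built-in functions (AnomalyDetection_SpikeAndDip for temporary anomalies and AnomalyDetection_ChangePoint for persistent ones); their models are not reproduced here. As a rough, hypothetical stand-in that only shows the idea of flagging a temporary spike in streaming data, the sketch below uses a rolling z-score over a trailing window. `detect_spikes`, the window size, and the threshold are illustrative assumptions.

```python
from collections import deque
from statistics import mean, pstdev


def detect_spikes(values, window=5, threshold=3.0):
    """Yield (index, value) for points far from the mean of the preceding window.

    A rolling z-score used as a simple stand-in; it is not the model that
    Azure Stream Analytics uses, and it only targets temporary spikes and dips.
    """
    history = deque(maxlen=window)
    for index, value in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            # A small floor avoids dividing by zero when the window is flat.
            sigma = max(pstdev(history), 1e-9)
            if abs(value - mu) / sigma > threshold:
                yield index, value
        history.append(value)


readings = [20.0, 20.5, 19.8, 20.2, 20.1, 35.0, 20.3, 20.0]
print(list(detect_spikes(readings)))  # [(5, 35.0)]
```

Because the spike then enters the window, the next few points are judged against an inflated spread. A persistent level shift (a change point) needs a different test, such as comparing the means of two adjacent windows.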

NEW QUESTION 11

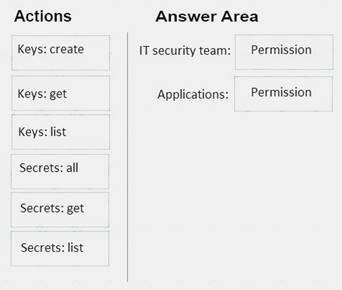

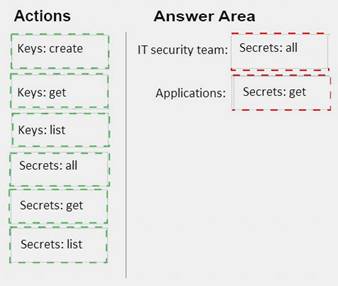

You use an Azure key vault to store credentials for several Azure Machine Learning applications. You need to configure the key vault to meet the following requirements:

• Ensure that the IT security team can add new passwords and periodically change the passwords.

• Ensure that the applications can securely retrieve the passwords for the applications.

• Use the principle of least privilege.

Which permissions should you grant? To answer, drag the appropriate permissions to the correct targets. Each permission may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

- A. Mastered

- B. Not Mastered

Answer: A

Explanation:

NEW QUESTION 12

You design an AI workflow that combines data from multiple data sources for analysis. The data sources are composed of:

• JSON files uploaded to an Azure Storage account

• On-premises Oracle databases

• Azure SQL databases

Which service should you use to ingest the data?

- A. Azure Data Factory

- B. Azure SQL Data Warehouse

- C. Azure Data Lake Storage

- D. Azure Databricks

Answer: A

Explanation:

References:

https://docs.microsoft.com/en-us/azure/data-factory/introduction

NEW QUESTION 13

You have thousands of images that contain text.

You need to process the text from the images into a machine-readable character stream. Which Azure Cognitive Services service should you use?

- A. Translator Text

- B. Text Analytics

- C. Computer Vision

- D. the Image Moderation API

Answer: C

Explanation:

With Computer Vision you can detect text in an image using optical character recognition (OCR) and extract the recognized words into a machine-readable character stream.
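The sketch below shows only the last step: turning a recognition result into a plain character stream. It assumes the JSON shape of the Computer Vision OCR operation's response (regions containing lines containing words, each word with a `text` field). `SAMPLE_RESPONSE` is hand-written, trimmed of bounding boxes, and not real service output, and `to_character_stream` is a hypothetical helper; the code makes no network call.

```python
import json

# Hand-written sample in the assumed shape of an OCR response
# (regions > lines > words); not real service output.
SAMPLE_RESPONSE = json.loads("""
{
  "language": "en",
  "regions": [
    {"lines": [
      {"words": [{"text": "INVOICE"}, {"text": "#1042"}]},
      {"words": [{"text": "Total:"}, {"text": "$99.00"}]}
    ]}
  ]
}
""")


def to_character_stream(ocr_result):
    """Join recognized words into lines, and lines into one newline-separated string."""
    lines = []
    for region in ocr_result.get("regions", []):
        for line in region.get("lines", []):
            lines.append(" ".join(word["text"] for word in line.get("words", [])))
    return "\n".join(lines)


print(to_character_stream(SAMPLE_RESPONSE))
```

The output is two lines of plain text, "INVOICE #1042" and "Total: $99.00", ready for downstream services such as Text Analytics.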

References:

https://azure.microsoft.com/en-us/services/cognitive-services/computer-vision/

https://docs.microsoft.com/en-us/azure/cognitive-services/content-moderator/image-moderation-api

NEW QUESTION 14

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You create several AI models in Azure Machine Learning Studio. You deploy the models to a production environment.

You need to monitor the compute performance of the models. Solution: You create environment files.

Does this meet the goal?

- A. Yes

- B. No

Answer: B

Explanation:

You need to enable model data collection.

References:

https://docs.microsoft.com/en-us/azure/machine-learning/service/how-to-enable-data-collection

NEW QUESTION 15

You need to build an AI solution that will be shared between several developers and customers. You plan to write code, host code, and document the runtime all within a single user experience. You build the environment to host the solution.

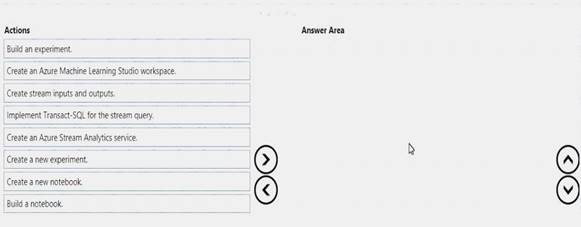

Which three actions should you perform in sequence next? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

- A. Mastered

- B. Not Mastered

Answer: A

Explanation:

Step 1: Create an Azure Machine Learning Studio workspace

Step 2: Create a notebook

You can manage notebooks by using the UI, the CLI, or the Workspace API. To create a notebook:

1. Click the Workspace button or the Home button in the sidebar, and then do one of the following:

• Next to any folder, click the menu drop-down on the right side of the text and select Create > Notebook.

• In the Workspace or a user folder, click the down caret and select Create > Notebook.

2. In the Create Notebook dialog, enter a name and select the notebook’s primary language.

3. If there are running clusters, the Cluster drop-down displays. Select the cluster to attach the notebook to.

4. Click Create.

Step 3: Create a new experiment

Create a new experiment by clicking +NEW at the bottom of the Machine Learning Studio window. Select EXPERIMENT > Blank Experiment.

References:

https://docs.azuredatabricks.net/user-guide/notebooks/notebook-manage.html

https://docs.microsoft.com/en-us/azure/machine-learning/service/quickstart-run-cloud-notebook

NEW QUESTION 16

You have an Azure Machine Learning experiment that must comply with GDPR regulations. You need to track compliance of the experiment and store documentation about the experiment. What should you use?

- A. Azure Table storage

- B. Azure Security Center

- C. an Azure Log Analytics workspace

- D. Compliance Manager

Answer: D

Explanation:

References:

https://azure.microsoft.com/en-us/blog/new-capabilities-to-enable-robust-gdpr-compliance/

NEW QUESTION 17

Which two services should be implemented so that Butler can find available rooms based on the technical requirements? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. QnA Maker

- B. Bing Entity Search

- C. Language Understanding (LUIS)

- D. Azure Search

- E. Content Moderator

Answer: AC

Explanation:

References:

https://azure.microsoft.com/en-in/services/cognitive-services/language-understanding-intelligent-service/

NEW QUESTION 18

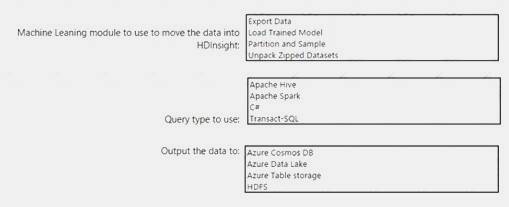

You are designing a solution that will ingest data from an Azure IoT Edge device, preprocess the data in Azure Machine Learning, and then move the data to Azure HDInsight for further processing.

What should you include in the solution? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

- A. Mastered

- B. Not Mastered

Answer: A

Explanation:

Box 1: Export Data

Use the Export data to Hive option of the Export Data module in Azure Machine Learning Studio. This option is useful when you are working with very large datasets and want to save your machine learning experiment data to a Hadoop cluster or HDInsight distributed storage.

Box 2: Apache Hive

Apache Hive is a data warehouse system for Apache Hadoop. Hive enables data summarization, querying, and analysis of data. Hive queries are written in HiveQL, which is a query language similar to SQL.

Box 3: Azure Data Lake

Default storage for the HDFS file system of HDInsight clusters can be associated with either an Azure Storage account or Azure Data Lake Storage.

References:

https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/export-to-hive-query

https://docs.microsoft.com/en-us/azure/hdinsight/hadoop/hdinsight-use-hive

NEW QUESTION 19

You are designing a solution that will use the Azure Content Moderator service to moderate user-generated content.

You need to moderate custom predefined content without repeatedly scanning the collected content. Which API should you use?

- A. Term List API

- B. Text Moderation API

- C. Image Moderation API

- D. Workflow API

Answer: A

Explanation:

The default global list of terms in Azure Content Moderator is sufficient for most content moderation needs. However, you might need to screen for terms that are specific to your organization. For example, you might want to tag competitor names for further review.

Use the List Management API to create custom lists of terms to use with the Text Moderation API. The Text - Screen operation scans your text for profanity, and also compares text against custom and shared blacklists.
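The Term List API itself is not called here. As a hypothetical local stand-in, the class below builds a custom term list once and screens each new piece of text against it, in the spirit of tagging competitor names. `TermList` and the sample names Contoso and Fabrikam are illustrative, and the whole-word matching assumes each term starts and ends with a letter or digit.

```python
import re


class TermList:
    """Hypothetical local stand-in for a Content Moderator custom term list.

    Terms are normalized and compiled once, so every new piece of text is
    screened against the same list without rebuilding it.
    """

    def __init__(self, terms):
        self.terms = sorted({term.lower() for term in terms})
        # One case-insensitive, whole-word pattern per term.
        self._patterns = [
            (term, re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE))
            for term in self.terms
        ]

    def screen(self, text):
        """Return the list terms that appear in `text`, in alphabetical order."""
        return [term for term, pattern in self._patterns if pattern.search(text)]


competitors = TermList(["Contoso", "Fabrikam"])
print(competitors.screen("Have you tried Fabrikam's new app?"))  # ['fabrikam']
```

Each incoming message is screened once as it arrives, so previously collected content does not need to be rescanned when the same list is reused.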

NEW QUESTION 20

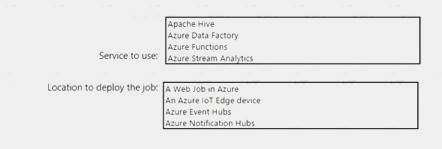

You are designing a solution that will ingest temperature data from IoT devices, calculate the average temperature, and then take action based on the aggregated data. The solution must meet the following requirements:

• Minimize the amount of uploaded data.

• Take action based on the aggregated data as quickly as possible.

What should you include in the solution? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

- A. Mastered

- B. Not Mastered

Answer: A

Explanation:

Box 1: Azure Functions

Azure Functions is a serverless service that hosts functions (small pieces of code), for example for event-driven applications.

There is no universal rule, because everything depends on your requirements. However, if you need to analyze a data stream, consider Azure Stream Analytics; if you want to implement a serverless, event-driven or timer-based application, consider Azure Functions or Logic Apps.

Note: Azure IoT Edge allows you to deploy complex event processing, machine learning, image recognition, and other high value AI without writing it in-house. Azure services like Azure Functions, Azure Stream Analytics, and Azure Machine Learning can all be run on-premises via Azure IoT Edge.

Box 2: An Azure IoT Edge device

Azure IoT Edge moves cloud analytics and custom business logic to devices so that your organization can focus on business insights instead of data management.

References:

https://docs.microsoft.com/en-us/azure/iot-edge/about-iot-edge
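A minimal sketch of the edge-side aggregation, assuming readings arrive as (timestamp in seconds, temperature) pairs: the device groups readings into fixed, non-overlapping (tumbling) windows, uploads only one average per window, and acts when an average crosses a threshold. The function names, the 60-second window, and the 30-degree threshold are hypothetical; in the design above this logic would run in a module on the IoT Edge device, and the code does not use any Azure SDK.

```python
from statistics import mean


def tumbling_averages(readings, window_seconds=60):
    """Average (timestamp_seconds, temperature) readings per fixed, non-overlapping window.

    Returns a list of (window_start, average) pairs sorted by window start.
    """
    windows = {}
    for timestamp, temperature in readings:
        # Align each reading to the start of its window.
        start = timestamp - timestamp % window_seconds
        windows.setdefault(start, []).append(temperature)
    return [(start, mean(values)) for start, values in sorted(windows.items())]


def windows_needing_action(averages, threshold=30.0):
    """Return the start times of windows whose average exceeds the threshold."""
    return [start for start, average in averages if average > threshold]


readings = [(0, 20.0), (20, 22.0), (40, 24.0), (60, 30.0), (90, 32.0)]
averages = tumbling_averages(readings)   # five readings become two uploaded values
print(averages)                          # [(0, 22.0), (60, 31.0)]
print(windows_needing_action(averages))  # [60]
```

Deciding on the device avoids a round trip to the cloud before acting, and uploading averages instead of raw readings reduces the data sent.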

NEW QUESTION 21

You are developing a Computer Vision application.

You plan to use a workflow that will load data from an on-premises database to Azure Blob storage, and then connect to an Azure Machine Learning service.

What should you use to orchestrate the workflow?

- A. Azure Kubernetes Service (AKS)

- B. Azure Pipelines

- C. Azure Data Factory

- D. Azure Container Instances

Answer: C

Explanation:

With Azure Data Factory you can use workflows to orchestrate data integration and data transformation processes at scale.

Build data integration, and easily transform and integrate big data processing and machine learning with the visual interface.

References:

https://azure.microsoft.com/en-us/services/data-factory/
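The core idea of orchestration is running each step only after the steps it depends on have finished. The sketch below models just that dependency ordering locally with Python's `graphlib`; it is not the Azure Data Factory API, and the activity names `copy_onprem_db_to_blob` and `call_azure_ml` are hypothetical labels for the two stages described in the question.

```python
from graphlib import TopologicalSorter

# Hypothetical activities for the workflow in the question. Each key maps to
# the set of activities that must complete before it can start.
PIPELINE = {
    "copy_onprem_db_to_blob": set(),
    "call_azure_ml": {"copy_onprem_db_to_blob"},
}


def run_pipeline(pipeline, run_activity):
    """Run every activity once, only after all of its dependencies have run."""
    completed = []
    for activity in TopologicalSorter(pipeline).static_order():
        run_activity(activity)
        completed.append(activity)
    return completed


run_pipeline(PIPELINE, lambda name: print(f"running {name}"))
```

In Data Factory the equivalent dependencies are declared between activities in a pipeline, and the service handles scheduling, retries, and connectivity to on-premises sources.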

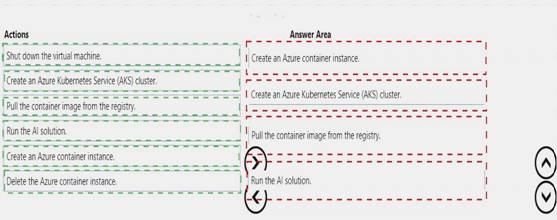

NEW QUESTION 22

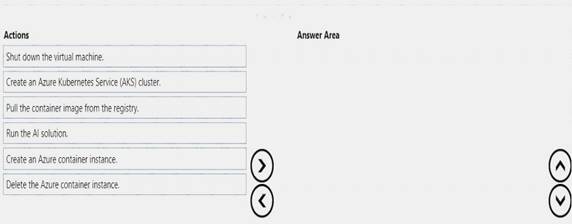

You have a container image that contains an AI solution. The solution will be used on demand and will only be needed a few hours each month.

You plan to use Azure Functions to deploy the environment on-demand.

You need to recommend the deployment process. The solution must minimize costs.

Which four actions should you recommend Azure Functions perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

- A. Mastered

- B. Not Mastered

Answer: A

Explanation:

NEW QUESTION 23

......

P.S. Easily pass the AI-100 exam with the 101 Q&As in the Surepassexam dumps and PDF version. Download the newest Surepassexam AI-100 dumps: https://www.surepassexam.com/AI-100-exam-dumps.html (101 new questions)