Act now and download your Amazon-Web-Services DOP-C01 test today! Do not waste time on worthless Amazon-Web-Services DOP-C01 tutorials. Download the Amazon-Web-Services AWS Certified DevOps Engineer - Professional exam with real questions and answers and begin to learn Amazon-Web-Services DOP-C01 with a classic professional.

Free DOP-C01 Demo Online For Amazon-Web-Services Certification:

NEW QUESTION 1

Which of the following are ways to ensure that data is secured while in transit when using the AWS Elastic Load Balancer? Choose 2 answers from the options given below.

- A. Use a TCP front end listener for your ELB

- B. Use an SSL front end listener for your ELB

- C. Use an HTTP front end listener for your ELB

- D. Use an HTTPS front end listener for your ELB

Answer: BD

Explanation:

The AWS documentation mentions the following:

You can create a load balancer that uses the SSL/TLS protocol for encrypted connections (also known as SSL offload). This feature enables traffic encryption between your load balancer and the clients that initiate HTTPS sessions, and for connections between your load balancer and your EC2 instances.

For more information on Elastic Load balancer and secure listeners, please refer to the below link: http://docs.aws.amazon.com/elasticloadbalancing/latest/classic/elb-https-load-balancers.html

NEW QUESTION 2

You have an AWS OpsWorks Stack running Chef Version 11.10. Your company hosts its own proprietary cookbook on Amazon S3, and this is specified as a custom cookbook in the stack. You want to use an open-source cookbook located in an external Git repository. What tasks should you perform to enable the use of both custom cookbooks?

- A. In the AWS OpsWorks stack settings, enable Berkshelf. Create a new cookbook with a Berksfile that specifies the other two cookbooks. Configure the stack to use this new cookbook.

- B. In the OpsWorks stack settings add the open source project's cookbook details in addition to your cookbook.

- C. Contact the open source project's maintainers and request that they pull your cookbook into theirs. Update the stack to use their cookbook.

- D. In your cookbook create an S3 symlink object that points to the open source project's cookbook.

Answer: A

Explanation:

To use an external cookbook on an instance, you need a way to install it and manage any dependencies. The preferred approach is to implement a cookbook that supports a dependency manager named Berkshelf. Berkshelf works on Amazon EC2 instances, including AWS OpsWorks Stacks instances, but it is also designed to work with Test Kitchen and Vagrant.

For more information on OpsWorks and Berkshelf, please visit the link:

• http://docs.aws.amazon.com/opsworks/latest/userguide/cookbooks-101-opsworks-berkshelf.html

NEW QUESTION 3

You are designing an application that contains protected health information. Security and compliance requirements for your application mandate that all protected health information in the application use encryption at rest and in transit. The application uses a three-tier architecture where data flows through the load balancer and is stored on Amazon EBS volumes for processing and the results are stored in Amazon S3 using the AWS SDK.

Which of the following two options satisfy the security requirements? (Select two)

- A. Use SSL termination on the load balancer, Amazon EBS encryption on Amazon EC2 instances and Amazon S3 with server-side encryption.

- B. Use SSL termination with a SAN SSL certificate on the load balancer. Amazon EC2 with all Amazon EBS volumes using Amazon EBS encryption, and Amazon S3 with server-side encryption with customer-managed keys.

- C. Use TCP load balancing on the load balancer. SSL termination on the Amazon EC2 instances. OS-level disk encryption on the Amazon EBS volumes and Amazon S3 with server-side encryption.

- D. Use TCP load balancing on the load balancer. SSL termination on the Amazon EC2 instances and Amazon S3 with server-side encryption.

- E. Use SSL termination on the load balancer, an SSL listener on the Amazon EC2 instances, Amazon EBS encryption on EBS volumes containing PHI and Amazon S3 with server-side encryption.

Answer: CE

Explanation:

The AWS documentation mentions the following:

HTTPS/SSL Listeners

You can create a load balancer with the following security features.

SSL Server Certificates

If you use HTTPS or SSL for your front-end connections, you must deploy an X.509 certificate (SSL server certificate) on your load balancer. The load balancer decrypts requests from clients before sending them to the back-end instances (known as SSL termination). For more information, see SSL/TLS Certificates for Classic Load Balancers.

If you don't want the load balancer to handle the SSL termination (known as SSL offloading), you can use TCP for both the front-end and back-end connections, and deploy certificates on the registered instances handling requests.

Reference Link:

• http://docs.aws.amazon.com/elasticloadbalancing/latest/classic/elb-listener-config.html

Create a Classic Load Balancer with an HTTPS Listener

A load balancer takes requests from clients and distributes them across the EC2 instances that are registered with the load balancer.

You can create a load balancer that listens on both the HTTP (80) and HTTPS (443) ports. If you specify that the HTTPS listener sends requests to the instances on port 80, the load balancer terminates the requests and communication from the load balancer to the instances is not encrypted. If the HTTPS listener sends requests to the instances on port 443, communication from the load balancer to the instances is encrypted.

Reference Link:

• http://docs.aws.amazon.com/elasticloadbalancing/latest/classic/elb-create-https-ssl-load-balancer.html

Options A and B are incorrect because they are missing encryption in transit between the ELB and the EC2 instances.

Option D is incorrect because it is missing encryption at rest on the data associated with the EC2 instances.
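To make the in-transit part of this reasoning concrete, the following is a minimal sketch that checks whether a Classic Load Balancer listener keeps traffic encrypted on both hops. The dictionary keys follow the listener fields of the Classic ELB API (`Protocol`, `InstanceProtocol`); the function name and the sample listeners are illustrative assumptions, and the TCP pass-through case assumes the instances terminate TLS themselves, which the listener alone cannot prove.

```python
from typing import Dict

# Listener protocols that carry TLS on a Classic Load Balancer.
ENCRYPTED = {"HTTPS", "SSL"}


def encrypted_in_transit(listener: Dict[str, object]) -> bool:
    """Return True if both hops of a listener can carry encrypted traffic.

    TCP on both sides is treated as pass-through. This sketch ASSUMES the
    instances terminate TLS themselves in that case.
    """
    front = str(listener["Protocol"]).upper()
    back = str(listener["InstanceProtocol"]).upper()
    if front in ENCRYPTED and back in ENCRYPTED:
        return True  # terminated at the ELB, then re-encrypted to the instance
    if front == "TCP" and back == "TCP":
        return True  # pass-through; TLS is assumed to end on the instance
    return False


# Hypothetical listeners matching the two cases described in the explanation.
https_to_80 = {"Protocol": "HTTPS", "LoadBalancerPort": 443,
               "InstanceProtocol": "HTTP", "InstancePort": 80}
https_to_443 = {"Protocol": "HTTPS", "LoadBalancerPort": 443,
                "InstanceProtocol": "HTTPS", "InstancePort": 443}
```

Under these assumptions, `encrypted_in_transit(https_to_80)` is false because the back-end hop is plain HTTP, while `encrypted_in_transit(https_to_443)` is true.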

NEW QUESTION 4

Which of the below 3 things can you achieve with the CloudWatch Logs service? Choose 3 options.

- A. Record API calls for your AWS account and deliver log files containing API calls to your Amazon S3 bucket

- B. Send the log data to AWS Lambda for custom processing or to load into other systems

- C. Stream the log data to Amazon Kinesis

- D. Stream the log data into Amazon Elasticsearch in near real-time with CloudWatch Logs subscriptions.

Answer: BCD

Explanation:

You can use Amazon CloudWatch Logs to monitor, store, and access your log files from Amazon Elastic Compute Cloud (Amazon EC2) instances, AWS CloudTrail, and other sources. You can then retrieve the associated log data from CloudWatch Logs.

For more information on CloudWatch Logs, please visit the below URL: http://docs.aws.amazon.com/AmazonCloudWatch/latest/logs/WhatIsCloudWatchLogs.html
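As an illustration of option B, here is a minimal sketch of the custom processing a Lambda function might do with log data delivered by a CloudWatch Logs subscription. The payload arrives base64-encoded and gzip-compressed under `event["awslogs"]["data"]`. The `"ERROR"` marker, the helper names, and the log group and stream names are assumptions made for the example.

```python
import base64
import gzip
import json
from typing import Any, Dict, List


def decode_subscription_event(event: Dict[str, Any]) -> Dict[str, Any]:
    """Unpack the base64-encoded, gzip-compressed payload sent by CloudWatch Logs."""
    compressed = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(compressed))


def error_messages(event: Dict[str, Any]) -> List[str]:
    """Return the log lines that contain the (assumed) marker "ERROR"."""
    payload = decode_subscription_event(event)
    return [e["message"] for e in payload["logEvents"] if "ERROR" in e["message"]]


def make_test_event(messages: List[str]) -> Dict[str, Any]:
    """Build a fake event in the same envelope, for local testing only."""
    payload = {
        "logGroup": "/hypothetical/app",      # hypothetical log group name
        "logStream": "hypothetical-stream",   # hypothetical log stream name
        "logEvents": [
            {"id": str(i), "timestamp": 0, "message": m}
            for i, m in enumerate(messages)
        ],
    }
    data = base64.b64encode(gzip.compress(json.dumps(payload).encode("utf-8")))
    return {"awslogs": {"data": data.decode("ascii")}}
```

A handler could call `error_messages(event)` and forward the result to another system; `make_test_event` exists only so the decoding can be exercised without AWS.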

NEW QUESTION 5

An application is currently writing a large number of records to a DynamoDB table in one region. There is a requirement for a secondary application to just take in the changes to the DynamoDB table every 2 hours and process the updates accordingly. Which of the following is an ideal way to ensure the secondary application can get the relevant changes from the DynamoDB table?

- A. Insert a timestamp for each record and then scan the entire table for the timestamp as per the last 2 hours.

- B. Create another DynamoDB table with the records modified in the last 2 hours.

- C. Use DynamoDB streams to monitor the changes in the DynamoDB table.

- D. Transfer the records to S3 which were modified in the last 2 hours

Answer: C

Explanation:

The AWS documentation mentions the following:

A DynamoDB stream is an ordered flow of information about changes to items in an Amazon DynamoDB table. When you enable a stream on a table, DynamoDB captures information about every modification to data items in the table.

Whenever an application creates, updates, or deletes items in the table, DynamoDB Streams writes a stream record with the primary key attribute(s) of the items that were modified. A stream record contains information about a data modification to a single item in a DynamoDB table. You can configure the stream so that the stream records capture additional information, such as the "before" and "after" images of modified items.

For more information on DynamoDB streams, please visit the below URL: http://docs.aws.amazon.com/amazondynamodb/latest/developerguide/Streams.html

NEW QUESTION 6

You are responsible for an application that leverages the Amazon SDK and Amazon EC2 roles for storing and retrieving data from Amazon S3, accessing multiple DynamoDB tables, and exchanging messages with Amazon SQS queues. Your VP of Compliance is concerned that you are not following security best practices for securing all of this access. He has asked you to verify that the application's AWS access keys are not older than six months and to provide control evidence that these keys will be rotated a minimum of once every six months.

Which option will provide your VP with the requested information?

- A. Create a script to query the IAM list-access keys API to get your application access key creation date and create a batch process to periodically create a compliance report for your VP.

- B. Provide your VP with a link to IAM AWS documentation to address the VP's key rotation concerns.

- C. Update your application to log changes to its AWS access key credential file and use a periodic Amazon EMR job to create a compliance report for your VP

- D. Create a new set of instructions for your configuration management tool that will periodically create and rotate the application's existing access keys and provide a compliance report to your VP.

Answer: B

Explanation:

The question focuses on IAM roles rather than long-lived access keys for accessing the services. Because the application uses EC2 roles, AWS takes care of issuing and rotating the temporary credentials provided through the roles when accessing these services.
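For contrast, the age check that option A describes for long-lived access keys would look roughly like the sketch below. It only shows the date arithmetic; the 182-day reading of "six months" and the function name are assumptions, and in practice the creation date would come from the IAM access key listing rather than being hard-coded.

```python
from datetime import datetime, timedelta, timezone

# Assumption: "six months" is approximated as 182 days.
MAX_KEY_AGE = timedelta(days=182)


def key_needs_rotation(create_date: datetime, now: datetime,
                       max_age: timedelta = MAX_KEY_AGE) -> bool:
    """Return True if a key created at create_date is older than max_age."""
    return now - create_date > max_age


# Hypothetical dates used only to exercise the check.
report_time = datetime(2024, 7, 1, tzinfo=timezone.utc)
old_key_created = datetime(2023, 12, 1, tzinfo=timezone.utc)
new_key_created = datetime(2024, 6, 1, tzinfo=timezone.utc)
```

With roles there is no such key to audit, which is why the answer points the VP to the IAM documentation instead of a rotation report.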

NEW QUESTION 7

You have a requirement to host a cluster of NoSQL databases. There is an expectation that there will be a lot of I/O on these databases. Which EBS volume type is best for high performance NoSQL cluster deployments?

- A. io1

- B. gp1

- C. standard

- D. gp2

Answer: A

Explanation:

Provisioned IOPS SSD should be used for critical business applications that require sustained IOPS performance, or more than 10,000 IOPS or 160 MiB/s of throughput per volume.

This is ideal for large database workloads, such as:

• MongoDB

• Cassandra

• Microsoft SQL Server

• MySQL

• PostgreSQL

• Oracle

For more information on the various EBS Volume Types, please refer to the below link:

• http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/EBSVolumeTypes.html

NEW QUESTION 8

A gaming company adopted AWS CloudFormation to automate load-testing of their games. They have created an AWS CloudFormation template for each gaming environment and one for the load-testing stack. The load-testing stack creates an Amazon Relational Database Service (RDS) Postgres database and two web servers running on Amazon Elastic Compute Cloud (EC2) that send HTTP requests, measure response times, and write the results into the database. A test run usually takes between 15 and 30 minutes. Once the tests are done, the AWS CloudFormation stacks are torn down immediately. The test results written to the Amazon RDS database must remain accessible for visualization and analysis.

Select possible solutions that allow access to the test results after the AWS CloudFormation load-testing stack is deleted.

Choose 2 answers.

- A. Define an Amazon RDS Read-Replica in the load-testing AWS CloudFormation stack and define a dependency relation between master and replica via the DependsOn attribute.

- B. Define an update policy to prevent deletion of the Amazon RDS database after the AWS CloudFormation stack is deleted.

- C. Define a deletion policy of type Retain for the Amazon RDS resource to assure that the RDS database is not deleted with the AWS CloudFormation stack.

- D. Define a deletion policy of type Snapshot for the Amazon RDS resource to assure that the RDS database can be restored after the AWS CloudFormation stack is deleted.

- E. Define automated backups with a backup retention period of 30 days for the Amazon RDS database and perform point-in-time recovery of the database after the AWS CloudFormation stack is deleted.

Answer: CD

Explanation:

With the DeletionPolicy attribute you can preserve or (in some cases) back up a resource when its stack is deleted. You specify a DeletionPolicy attribute for each resource that you want to control. If a resource has no DeletionPolicy attribute, AWS CloudFormation deletes the resource by default.

To keep a resource when its stack is deleted, specify Retain for that resource. You can use Retain for any resource. For example, you can retain a nested stack, S3 bucket, or EC2 instance so that you can continue to use or modify those resources after you delete their stacks.

For more information on DeletionPolicy, please visit the below URL: http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/aws-attribute-deletionpolicy.html
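The shape of such a template can be sketched as follows, built as a Python dictionary and serialized to JSON. The `DeletionPolicy` attribute sits next to `Type` and `Properties` on the resource. The logical ID `LoadTestResultsDb`, the parameter name, and all property values are hypothetical placeholders; swap `"Snapshot"` for `"Retain"` to model option C.

```python
import json
from typing import Any, Dict, Optional

# Hypothetical load-testing template fragment; names and values are placeholders.
template: Dict[str, Any] = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {"DbPassword": {"Type": "String", "NoEcho": True}},
    "Resources": {
        "LoadTestResultsDb": {
            "Type": "AWS::RDS::DBInstance",
            # Take a final snapshot when the stack is deleted.
            "DeletionPolicy": "Snapshot",
            "Properties": {
                "Engine": "postgres",
                "DBInstanceClass": "db.t3.micro",
                "AllocatedStorage": "20",
                "MasterUsername": "loadtest",
                "MasterUserPassword": {"Ref": "DbPassword"},
            },
        }
    },
}


def deletion_policies(tpl: Dict[str, Any]) -> Dict[str, Optional[str]]:
    """Map each logical resource ID to its explicit DeletionPolicy (None if unset)."""
    return {
        name: resource.get("DeletionPolicy")
        for name, resource in tpl["Resources"].items()
    }


template_json = json.dumps(template, indent=2)
```

A pipeline step could run `deletion_policies(template)` before deployment to confirm that the database resource carries an explicit policy.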

NEW QUESTION 9

There is a requirement to monitor API calls against your AWS account by different users and entities. There needs to be a history of those calls. The history of those calls is needed in bulk for later review. Which 2 services can be used in this scenario?

- A. AWS Config; AWS Inspector

- B. AWS CloudTrail; AWS Config

- C. AWS CloudTrail; CloudWatch Events

- D. AWS Config; AWS Lambda

Answer: C

Explanation:

You can use AWS CloudTrail to get a history of AWS API calls and related events for your account. This history includes calls made with the AWS Management Console, AWS Command Line Interface, AWS SDKs, and other AWS services. For more information on CloudTrail, please visit the below URL:

• http://docs.aws.amazon.com/awscloudtrail/latest/userguide/cloudtrail-user-guide.html

Amazon CloudWatch Events delivers a near real-time stream of system events that describe changes in Amazon Web Services (AWS) resources. Using simple rules that you can quickly set up, you can match events and route them to one or more target functions or streams. CloudWatch Events becomes aware of operational changes as they occur. CloudWatch Events responds to these operational changes and takes corrective action as necessary, by sending messages to respond to the environment, activating functions, making changes, and capturing state information. For more information on CloudWatch Events, please visit the below URL:

• http://docs.aws.amazon.com/AmazonCloudWatch/latest/events/WhatIsCloudWatchEvents.html

NEW QUESTION 10

There is a requirement for an application hosted on a VPC to access the on-premises LDAP server. The VPC and the on-premises location are connected via an IPsec VPN. Which of the below are the right options for the application to authenticate each user? Choose 2 answers from the options below.

- A. Develop an identity broker that authenticates against IAM Security Token Service to assume an IAM role in order to get temporary AWS security credentials. The application calls the identity broker to get AWS temporary security credentials.

- B. The application authenticates against LDAP and retrieves the name of an IAM role associated with the user. The application then calls the IAM Security Token Service to assume that IAM role. The application can use the temporary credentials to access any AWS resources.

- C. Develop an identity broker that authenticates against LDAP and then calls IAM Security Token Service to get IAM federated user credentials. The application calls the identity broker to get IAM federated user credentials with access to the appropriate AWS service.

- D. The application authenticates against LDAP. The application then calls the AWS Identity and Access Management (IAM) Security service to log in to IAM using the LDAP credentials. The application can use the IAM temporary credentials to access the appropriate AWS service.

Answer: BC

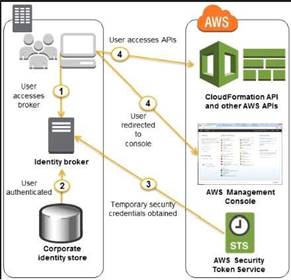

Explanation:

When you need an on-premises environment to work with a cloud environment, you would normally have 2 artefacts for authentication purposes:

• An identity store - This is the on-premises store, such as Active Directory, which stores all the information for the users and the groups they belong to.

• An identity broker - This is used as an intermediate agent between the on-premises location and the cloud environment. In Windows you have a system known as Active Directory Federation Services to provide this facility.

Hence in the above case, you need to have an identity broker which can work with the identity store and the Security Token Service in AWS. An example diagram of how this works from the AWS documentation is included with this question.

For more information on federated access, please visit the below link: http://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_common-scenarios_federated-users.html

NEW QUESTION 11

You are hired as the new head of operations for a SaaS company. Your CTO has asked you to make debugging any part of your entire operation simpler and as fast as possible. She complains that she has no idea what is going on in the complex, service-oriented architecture, because the developers just log to disk, and it's very hard to find errors in logs on so many services. How can you best meet this requirement and satisfy your CTO?

- A. Copy all log files into AWS S3 using a cron job on each instance. Use an S3 Notification Configuration on the PutBucket event and publish events to AWS Lambda. Use the Lambda to analyze logs as soon as they come in and flag issues.

- B. Begin using CloudWatch Logs on every service. Stream all Log Groups into S3 objects. Use AWS EMR cluster jobs to perform ad hoc MapReduce analysis and write new queries when needed.

- C. Copy all log files into AWS S3 using a cron job on each instance. Use an S3 Notification Configuration on the PutBucket event and publish events to AWS Kinesis. Use Apache Spark on AWS EMR to perform at-scale stream processing queries on the log chunks and flag issues.

- D. Begin using CloudWatch Logs on every service. Stream all Log Groups into an AWS Elasticsearch Service Domain running Kibana 4 and perform log analysis on a search cluster.

Answer: D

Explanation:

Amazon Elasticsearch Service makes it easy to deploy, operate, and scale Elasticsearch for log analytics, full text search, application monitoring, and more. Amazon Elasticsearch Service is a fully managed service that delivers Elasticsearch's easy-to-use APIs and real-time capabilities along with the availability, scalability, and security required by production workloads. The service offers built-in integrations with Kibana, Logstash, and AWS services including Amazon Kinesis Firehose, AWS Lambda, and Amazon CloudWatch so that you can go from raw data to actionable insights quickly. For more information on Elasticsearch, please refer to the below link:

• https://aws.amazon.com/elasticsearch-service/

NEW QUESTION 12

Your development team is developing a mobile application that accesses resources in AWS. The users accessing this application will be logging in via Facebook and Google. Which of the following AWS mechanisms would you use to authenticate users for the application that needs to access AWS resources?

- A. Use separate IAM users that correspond to each Facebook and Google user

- B. Use separate IAM Roles that correspond to each Facebook and Google user

- C. Use Web identity federation to authenticate the users

- D. Use AWS Policies to authenticate the users

Answer: C

Explanation:

The AWS documentation mentions the following:

You can directly configure individual identity providers to access AWS resources using web identity federation. AWS currently supports authenticating users using web identity federation through several identity providers:

• Login with Amazon

• Facebook Login

• Google Sign-in

For more information on Web identity federation, please visit the below URL:

• http://docs.aws.amazon.com/sdk-for-javascript/v2/developer-guide/loading-browser-credentials-federated-id.html

NEW QUESTION 13

You are managing an application that contains Go as the front end, MongoDB for document management and is hosted on a relevant Web server. You pre-bake AMIs with the latest version of the Web server, then use the User Data section to set up the application. You now have a change to the underlying operating system version and need to deploy that accordingly. How can this be done in the easiest way possible?

- A. Create a new EBS Volume with the relevant OS patches and attach it to the EC2 instance.

- B. Create a CloudFormation stack with the new AMI and then deploy the application accordingly.

- C. Create a new pre-baked AMI with the new OS and use the User Data section to deploy the application.

- D. Create an OpsWorks stack with the new AMI and then deploy the application accordingly.

Answer: C

Explanation:

The best way in this scenario is to continue the same deployment process which was being used and create a new AMI and then use the User Data section to deploy the application.

For more information on AWS AMIs, please see the below link:

• http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/AMIs.html

NEW QUESTION 14

You work at a company that makes use of AWS resources. One of the key security policies is to ensure that all data is encrypted both at rest and in transit. Which of the following is not a right implementation which aligns to this policy?

- A. Using S3 Server Side Encryption (SSE) to store the information

- B. Enable SSL termination on the ELB

- C. Enabling Proxy Protocol

- D. Enabling sticky sessions on your load balancer

Answer: B

Explanation:

Please note the keyword "NOT" in the question.

Option A is incorrect. Enabling S3 SSE helps encrypt data at rest in S3, so Option A is invalid.

Option B is correct. If you disable SSL termination on the ELB, the traffic will be encrypted all the way to the backend. SSL termination allows encrypted traffic between the client and the ELB but causes traffic to be unencrypted between the ELB and the backend (presumably EC2, an ECS task, etc.).

If SSL is not terminated on the ELB, you must use Layer 4 to have traffic encrypted all the way.

Sticky sessions are not supported with Layer 4 (TCP endpoint). Thus option D, "Enabling sticky sessions on your load balancer", can't be used and is the right answer.

For more information on sticky sessions, please visit the below URL: https://docs.aws.amazon.com/elasticloadbalancing/latest/classic/elb-sticky-sessions.html

Requirements

• An HTTP/HTTPS load balancer.

• At least one healthy instance in each Availability Zone.

If you don't want the load balancer to handle the SSL termination (known as SSL offloading), you can use TCP for both the front-end and back-end connections, and deploy certificates on the registered instances handling requests.

For more information on elb-listener-config, please visit the below URL:

• https://docs.aws.amazon.com/elasticloadbalancing/latest/classic/elb-listener-config.html

If the front-end connection uses TCP or SSL, then your back-end connections can use either TCP or SSL.

Note: You can use an HTTPS listener and still use SSL on the backend, but the ELB must terminate, decrypt, and re-encrypt. This is slower and less secure than using the same encryption all the way to the backend. It also breaks the question requirement of having all data encrypted in transit, since it forces the ELB to decrypt.

Proxy protocol is used to provide a secure transport connection, hence Option C is also incorrect.

For more information on SSL listeners for your load balancer, please visit the below URLs:

http://docs.aws.amazon.com/elasticloadbalancing/latest/classic/elb-https-load-balancers.html

https://aws.amazon.com/blogs/aws/elastic-load-balancer-support-for-ssl-termination/

NEW QUESTION 15

You want to pass queue messages that are 1GB each. How should you achieve this?

- A. Use Kinesis as a buffer stream for message bodies. Store the checkpoint id for the placement in the Kinesis Stream in SQS.

- B. Use the Amazon SQS Extended Client Library for Java and Amazon S3 as a storage mechanism for message bodies.

- C. Use SQS's support for message partitioning and multi-part uploads on Amazon S3.

- D. Use AWS EFS as a shared pool storage medium. Store filesystem pointers to the files on disk in the SQS message bodies.

Answer: B

Explanation:

You can manage Amazon SQS messages with Amazon S3. This is especially useful for storing and consuming messages with a message size of up to 2 GB. To manage Amazon SQS messages with Amazon S3, use the Amazon SQS Extended Client Library for Java. Specifically, you use this library to:

• Specify whether messages are always stored in Amazon S3 or only when a message's size exceeds 256 KB.

• Send a message that references a single message object stored in an Amazon S3 bucket.

• Get the corresponding message object from an Amazon S3 bucket.

• Delete the corresponding message object from an Amazon S3 bucket.

For more information on processing large messages for SQS, please visit the below URL: http://docs.aws.amazon.com/AWSSimpleQueueService/latest/SQSDeveloperGuide/sqs-s3-messages.html
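The pattern behind the library can be sketched in a few lines: bodies above the SQS size limit go to object storage, and only a small pointer travels through the queue. This is not the Extended Client Library's API (that library is for Java). The class, the in-memory stand-ins for S3 and SQS, and the pointer format are all assumptions made to show the idea, and the inline path assumes UTF-8 text payloads.

```python
import json
import uuid
from typing import Dict, List

SQS_LIMIT_BYTES = 256 * 1024  # message size threshold mentioned above


class LargeMessageClient:
    """Toy claim-check client; dict and list stand in for S3 and SQS."""

    def __init__(self) -> None:
        self.bucket: Dict[str, bytes] = {}  # stand-in for an S3 bucket
        self.queue: List[str] = []          # stand-in for an SQS queue

    def send(self, body: bytes) -> None:
        if len(body) > SQS_LIMIT_BYTES:
            key = str(uuid.uuid4())
            self.bucket[key] = body  # store the payload out of band
            self.queue.append(json.dumps({"s3_key": key}))  # hypothetical pointer
        else:
            # Assumes small payloads are UTF-8 text.
            self.queue.append(json.dumps({"inline": body.decode("utf-8")}))

    def receive(self) -> bytes:
        message = json.loads(self.queue.pop(0))
        if "s3_key" in message:
            # Fetch and delete the stored object, mirroring the steps listed above.
            return self.bucket.pop(message["s3_key"])
        return message["inline"].encode("utf-8")
```

Sending a body one byte over the limit leaves only a short JSON pointer in `queue`, and `receive()` returns the original bytes while removing the stored object.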

NEW QUESTION 16

You need to deploy a new application version to production. Because the deployment is high-risk, you need to roll the new version out to users over a number of hours, to make sure everything is working correctly. You need to be able to control the proportion of users seeing the new version of the application down to the percentage point. You use ELB and EC2 with Auto Scaling Groups and custom AMIs with your code pre-installed assigned to Launch Configurations. There are no database-level changes during your deployment. You have been told you cannot spend too much money, so you must not increase the number of EC2 instances much at all during the deployment, but you also need to be able to switch back to the original version of code quickly if something goes wrong. What is the best way to meet these requirements?

- A. Create a second ELB, Auto Scaling Launch Configuration, and Auto Scaling Group using the Launch Configuration. Create AMIs with all code pre-installed. Assign the new AMI to the second Auto Scaling Launch Configuration. Use Route53 Weighted Round Robin Records to adjust the proportion of traffic hitting the two ELBs.

- B. Use the Blue-Green deployment method to enable the fastest possible rollback if needed. Create a full second stack of instances and cut the DNS over to the new stack of instances, and change the DNS back if a rollback is needed.

- C. Create AMIs with all code pre-installed. Assign the new AMI to the Auto Scaling Launch Configuration, to replace the old one. Gradually terminate instances running the old code (launched with the old Launch Configuration) and allow the new AMIs to boot to adjust the traffic balance to the new code. On rollback, reverse the process by doing the same thing, but changing the AMI on the Launch Config back to the original code.

- D. Migrate to use AWS Elastic Beanstalk. Use the established and well-tested Rolling Deployment setting AWS provides on the new Application Environment, publishing a zip bundle of the new code and adjusting the wait period to spread the deployment over time. Re-deploy the old code bundle to rollback if needed.

Answer: A

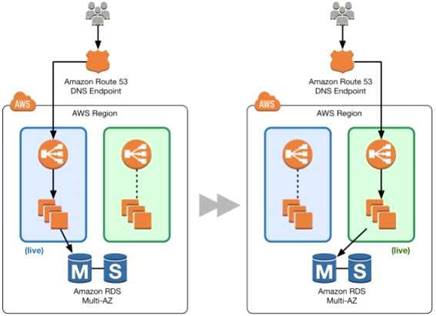

Explanation:

This is an example of a Blue Green Deployment.

You can shift traffic all at once or you can do a weighted distribution. With Amazon Route 53, you can define a percentage of traffic to go to the green environment and gradually update the weights until the green environment carries the full production traffic. A weighted distribution provides the ability to perform canary analysis where a small percentage of production traffic is introduced to a new environment. You can test the new code and monitor for errors, limiting the blast radius if any issues are encountered. It also allows the green environment to scale out to support the full production load if you're using Elastic Load Balancing.

For more information on Blue Green Deployments, please visit the below URL:

• https://d0.awsstatic.com/whitepapers/AWS_Blue_Green_Deployments.pdf
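The arithmetic behind weighted records is simple: each record receives its weight divided by the sum of all weights. The sketch below computes those shares and generates an evenly spaced shift plan from blue to green. The record names `blue` and `green`, the function names, and the equal-step schedule are assumptions for illustration; a real rollout would pick its own steps and pause to watch metrics between them.

```python
from typing import Dict, List


def traffic_share(weights: Dict[str, int]) -> Dict[str, float]:
    """Fraction of traffic each weighted record receives (weight / sum of weights)."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("at least one record needs a non-zero weight")
    return {name: weight / total for name, weight in weights.items()}


def shift_plan(steps: int) -> List[Dict[str, int]]:
    """Weights that move 'green' from 0 to 100 percent in equal steps."""
    percents = [round(100 * i / steps) for i in range(steps + 1)]
    return [{"blue": 100 - p, "green": p} for p in percents]
```

Setting weights of 99 and 1 sends one percent of requests to the green ELB, which matches the requirement to control exposure down to the percentage point; rolling back means restoring the previous weights.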

NEW QUESTION 17

As part of your deployment pipeline, you want to enable automated testing of your AWS CloudFormation template. What testing should be performed to enable faster feedback while minimizing costs and risk? Select three answers from the options given below.

- A. Use the AWS CloudFormation Validate Template to validate the syntax of the template

- B. Use the AWS CloudFormation Validate Template to validate the properties of resources defined in the template.

- C. Validate the template's syntax using a general JSON parser.

- D. Validate the AWS CloudFormation template against the official XSD scheme definition published by Amazon Web Services.

- E. Update the stack with the template. If the template fails, rollback will return the stack and its resources to exactly the same state.

- F. When creating the stack, specify an Amazon SNS topic to which your testing system is subscribed. Your testing system runs tests when it receives notification that the stack is created or updated.

Answer: AEF

Explanation:

The AWS documentation mentions the following:

The aws cloudformation validate-template command is designed to check only the syntax of your template. It does not ensure that the property values that you have specified for a resource are valid for that resource. Nor does it determine the number of resources that will exist when the stack is created.

To check the operational validity, you need to attempt to create the stack. There is no sandbox or test area for AWS CloudFormation stacks, so you are charged for the resources you create during testing. Option F is needed for notification.

For more information on CloudFormation template validation, please visit the link:

http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/using-cfn-validate-template.html

NEW QUESTION 18

What is the amount of time that the OpsWorks Stacks service waits for a response from an underlying instance before deeming it a failed instance?

- A. 1 minute.

- B. 5 minutes.

- C. 20 minutes.

- D. 60 minutes

Answer: B

Explanation:

The AWS documentation mentions:

Every instance has an AWS OpsWorks Stacks agent that communicates regularly with the service. AWS OpsWorks Stacks uses that communication to monitor instance health. If an agent does not communicate with the service for more than approximately five minutes, AWS OpsWorks Stacks considers the instance to have failed.

For more information on the Auto healing feature, please visit the below URL: http://docs.aws.amazon.com/opsworks/latest/userguide/workinginstances-autohealing.html

NEW QUESTION 19

The operations team and the development team want a single place to view both operating system and application logs. How should you implement this using AWS services? Choose two from the options below.

- A. Using AWS CloudFormation, create a CloudWatch Logs LogGroup and send the operating system and application logs of interest using the CloudWatch Logs Agent.

- B. Using AWS CloudFormation and configuration management, set up remote logging to send events via UDP packets to CloudTrail.

- C. Using configuration management, set up remote logging to send events to Amazon Kinesis and insert these into Amazon CloudSearch or Amazon Redshift, depending on available analytic tools.

- D. Using AWS CloudFormation, merge the application logs with the operating system logs, and use IAM Roles to allow both teams to have access to view console output from Amazon EC2.

Answer: AC

Explanation:

Option B is invalid because CloudTrail is not designed to take in UDP packets.

Option D is invalid because CloudWatch Logs is already available for this purpose, so there is no need to build a separately merged log.

You can use Amazon CloudWatch Logs to monitor, store, and access your log files from Amazon Elastic Compute Cloud (Amazon EC2) instances, AWS CloudTrail, and other sources. You can then retrieve the associated log data from CloudWatch Logs.

For more information on CloudWatch Logs, please refer to the below link: http://docs.aws.amazon.com/AmazonCloudWatch/latest/logs/WhatIsCloudWatchLogs.html

You can then use Kinesis to process those logs.

For more information on Amazon Kinesis, please refer to the below link: http://docs.aws.amazon.com/streams/latest/dev/introduction.html
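As a rough illustration of option A, the sketch below builds a small CloudFormation template containing one `AWS::Logs::LogGroup` resource. The helper name `log_group_template`, the logical ID `CentralLogGroup`, the log group name, and the 14-day retention are all assumptions chosen for the example; the agent configuration that ships the logs is not shown.

```python
import json


def log_group_template(log_group_name, retention_days=14):
    """Build a CloudFormation template (as a dict) with a single log group."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            # "CentralLogGroup" is a hypothetical logical ID.
            "CentralLogGroup": {
                "Type": "AWS::Logs::LogGroup",
                "Properties": {
                    "LogGroupName": log_group_name,
                    "RetentionInDays": retention_days,
                },
            }
        },
    }


# The log group name is a placeholder, not a value from the question.
template = log_group_template("/example/os-and-app-logs")
print(json.dumps(template, indent=2))
```

Both teams would then read operating system and application logs from the same log group.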

NEW QUESTION 20

You have a requirement to automate the creation of EBS Snapshots. Which of the following can be used to achieve this in the best way possible?

- A. Create a PowerShell script which uses the AWS CLI to get the volumes and then run the script as a cron job.

- B. Use the AWS Config service to create a snapshot of the AWS Volumes

- C. Use the AWS CodeDeploy service to create a snapshot of the AWS Volumes

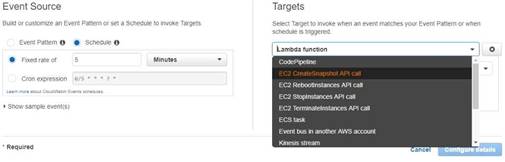

- D. Use CloudWatch Events to trigger the snapshots of EBS Volumes

Answer: D

Explanation:

The best approach is to use the built-in CloudWatch Events service to automate the creation of EBS snapshots. With Option A, you would be restricted to running the PowerShell script on Windows machines and would have to maintain the script itself. You would also have the overhead of a separate instance just to run that script.

In CloudWatch Events, you can set the rule's target to the EC2 CreateSnapshot API call.

The AWS Documentation mentions

Amazon CloudWatch Events delivers a near real-time stream of system events that describe changes in Amazon Web Services (AWS) resources. Using simple rules that you can quickly set up, you can match events and route them to one or more target functions or streams. CloudWatch Events becomes aware of operational changes as they occur. CloudWatch Events responds to these operational changes and takes corrective action as necessary, by sending messages to respond to the environment, activating functions, making changes, and capturing state information.

For more information on CloudWatch Events, please visit the below URL:

• http://docs.aws.amazon.com/AmazonCloudWatch/latest/events/WhatIsCloudWatchEvents.html
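A scheduled rule is one way to drive such snapshots. The sketch below only assembles the parameters for a rule with a `rate(...)` schedule expression and makes no AWS call. The helper names and the rule name `daily-ebs-snapshot` are hypothetical, and the target that invokes the EC2 CreateSnapshot call is deliberately left out.

```python
def rate_expression(value, unit):
    """Format a rate schedule expression such as 'rate(1 day)' or 'rate(12 hours)'."""
    if value < 1:
        raise ValueError("value must be a positive integer")
    if unit not in ("minute", "hour", "day"):
        raise ValueError("unit must be 'minute', 'hour' or 'day'")
    # The unit is singular for a value of 1 and plural otherwise.
    suffix = "" if value == 1 else "s"
    return f"rate({value} {unit}{suffix})"


def snapshot_rule_params(rule_name, value, unit):
    """Assemble rule parameters as a plain dict (nothing is sent to AWS here)."""
    return {
        "Name": rule_name,
        "ScheduleExpression": rate_expression(value, unit),
        "State": "ENABLED",
    }


# "daily-ebs-snapshot" is a placeholder rule name.
print(snapshot_rule_params("daily-ebs-snapshot", 1, "day"))
```

Keeping the schedule in the rule means no instance has to stay running just to host a cron job, which is the drawback the explanation attributes to option A.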

NEW QUESTION 21

Which of the following environment types are available in Elastic Beanstalk? Choose 2 answers from the options given below.

- A. Single Instance

- B. Multi-Instance

- C. Load Balancing Autoscaling

- D. SQS, Autoscaling

Answer: AC

Explanation:

The AWS Documentation mentions

In Elastic Beanstalk, you can create a load-balancing, autoscaling environment or a single-instance environment. The type of environment that you require depends on the application that you deploy.

When you open the Configuration page for your environment, you can see the Environment type there.
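The same choice can also be expressed as an environment option setting. The sketch below assumes the `EnvironmentType` option in the `aws:elasticbeanstalk:environment` namespace with the values `SingleInstance` and `LoadBalanced`; the helper name `environment_type_setting` is hypothetical, and the dict is only built locally.

```python
def environment_type_setting(single_instance):
    """Return one option setting that selects the environment type."""
    return {
        "Namespace": "aws:elasticbeanstalk:environment",
        "OptionName": "EnvironmentType",
        # Two environment types exist: single instance, or load balanced with auto scaling.
        "Value": "SingleInstance" if single_instance else "LoadBalanced",
    }


print(environment_type_setting(single_instance=True))
print(environment_type_setting(single_instance=False))
```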

NEW QUESTION 22

You are a DevOps Engineer for your company. You are planning on using CloudWatch for monitoring the resources hosted in AWS. Which of the following can you ideally do with CloudWatch Logs? Choose 3 answers from the options given below.

- A. Stream the log data to Amazon Kinesis for further processing

- B. Send the log data to AWS Lambda for custom processing

- C. Stream the log data into Amazon Elasticsearch for any search analysis required.

- D. Send the data to SQS for further processing.

Answer: ABC

Explanation:

Amazon Kinesis can be used for rapid and continuous data intake and aggregation. The type of data used includes IT infrastructure log data, application logs, social media, market data feeds, and web clickstream data.

AWS Lambda is a web service which can be used for serverless processing of the logs published by CloudWatch Logs.

Amazon Elasticsearch Service makes it easy to deploy, operate, and scale Elasticsearch for log analytics, full text search, application monitoring, and more.

For more information on CloudWatch Logs, please see the below link: http://docs.aws.amazon.com/AmazonCloudWatch/latest/logs/WhatIsCloudWatchLogs.html
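Streaming to Kinesis or Lambda is typically configured through a subscription filter on the log group. The sketch below only assembles the filter parameters as a dict; the helper name, the log group, and both ARNs are placeholders. It assumes that a Kinesis stream destination needs a role ARN that CloudWatch Logs can assume, while a Lambda destination does not.

```python
def subscription_filter_params(log_group, filter_name, destination_arn,
                               role_arn=None, pattern=""):
    """Assemble subscription filter parameters; an empty pattern matches every event."""
    params = {
        "logGroupName": log_group,
        "filterName": filter_name,
        "filterPattern": pattern,
        "destinationArn": destination_arn,
    }
    # Only include the role when one is supplied (for example, for a Kinesis stream).
    if role_arn is not None:
        params["roleArn"] = role_arn
    return params


# All names and ARNs below are placeholders.
to_kinesis = subscription_filter_params(
    "/example/app-logs",
    "all-events-to-kinesis",
    "arn:aws:kinesis:us-east-1:123456789012:stream/example-stream",
    role_arn="arn:aws:iam::123456789012:role/example-cwl-to-kinesis",
)
print(to_kinesis)
```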

NEW QUESTION 23

You're building a mobile application game. The application needs permissions for each user to communicate and store data in DynamoDB tables. What is the best method for granting each mobile device that installs your application to access DynamoDB tables for storage when required? Choose the correct answer from the options below

- A. During the install and game configuration process, have each user create an IAM credential and assign the IAM user to a group with proper permissions to communicate with DynamoDB.

- B. Create an IAM group that only gives access to your application and to the DynamoDB tables. Then, when writing to DynamoDB, simply include the unique device ID to associate the data with that specific user.

- C. Create an IAM role with the proper permission policy to communicate with the DynamoDB table. Use web identity federation, which assumes the IAM role using AssumeRoleWithWebIdentity, when the user signs in, granting temporary security credentials using STS.

- D. Create an Active Directory server and an AD user for each mobile application user. When the user signs in to the AD sign-on, allow the AD server to federate using SAML 2.0 to IAM and assign a role to the AD user which is then assumed with AssumeRoleWithSAML

Answer: C

Explanation:

For access to any AWS service, the ideal approach for any application is to use Roles. This is the first preference.

For more information on IAM policies please refer to the below link:

http://docs.aws.amazon.com/IAM/latest/UserGuide/access_policies.html

Next, for any web application, you need to use web identity federation. Hence option C is the right option. This, along with the usage of roles, is highly stressed in the AWS documentation.

The AWS documentation mentions the following

When developing a web application it is recommended not to embed or distribute long-term AWS credentials with apps that a user downloads to a device, even in an encrypted store. Instead, build your app so that it requests temporary AWS security credentials dynamically when needed using web identity federation. The supplied temporary credentials map to an AWS role that has only the permissions needed to perform the tasks required by the mobile app.

For more information on web identity federation please refer to the below link: http://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_providers_oidc.html
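For the role to be assumable through web identity federation, its trust policy has to allow `sts:AssumeRoleWithWebIdentity` for the identity provider. The sketch below builds such a trust policy as a dict. The helper name is hypothetical, and the provider `accounts.google.com`, the condition key `accounts.google.com:aud`, and the client ID are example values rather than anything stated in the question.

```python
import json


def web_identity_trust_policy(provider, audience_key, audience):
    """Build a role trust policy that lets a web identity provider's users assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": provider},
                "Action": "sts:AssumeRoleWithWebIdentity",
                # Restrict the role to tokens issued for this one application.
                "Condition": {"StringEquals": {audience_key: audience}},
            }
        ],
    }


# Provider, condition key, and client ID are illustrative placeholders.
policy = web_identity_trust_policy(
    "accounts.google.com", "accounts.google.com:aud", "example-client-id"
)
print(json.dumps(policy, indent=2))
```

The DynamoDB permissions themselves would live in a separate permission policy attached to the same role, so the app never ships long-term credentials.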

NEW QUESTION 24

......
