
Online Amazon-Web-Services DOP-C01 free dumps demo Below:

NEW QUESTION 1

You have a legacy application running that uses an m4.large instance size and cannot scale with Auto Scaling, but only has peak performance 5% of the time. This is a huge waste of resources and money so your Senior Technical Manager has set you the task of trying to reduce costs while still keeping the legacy application running as it should. Which of the following would best accomplish the task your manager has set you? Choose the correct answer from the options below

- A. Use a T2 burstable performance instance.

- B. Use a C4.large instance with enhanced networking.

- C. Use two t2.nano instances that have Single Root I/O Virtualization.

- D. Use t2.nano instance and add spot instances when they are required.

Answer: A

Explanation:

The AWS documentation clearly recommends T2 EC2 instance types for instances that don't use the CPU that often.

T2

T2 instances are Burstable Performance Instances that provide a baseline level of CPU performance with the ability to burst above the baseline.

T2 Unlimited instances can sustain high CPU performance for as long as a workload needs it. For most general-purpose workloads, T2 Unlimited instances will provide ample performance without any additional charges. If the instance needs to run at higher CPU utilization for a prolonged period, it can also do so at a flat additional charge of 5 cents per vCPU-hour.

The baseline performance and ability to burst are governed by CPU Credits. T2 instances receive CPU Credits continuously at a set rate depending on the instance size, accumulating CPU Credits when they are idle, and consuming CPU credits when they are active. T2 instances are a good choice for a variety of general-purpose workloads including micro-services, low-latency interactive applications, small and medium databases, virtual desktops, development, build and stage environments, code repositories, and product prototypes. For more information see Burstable Performance Instances.

For more information on EC2 instance types, please see the link below: https://aws.amazon.com/ec2/instance-types/
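To make the CPU Credit mechanism described above concrete, here is a minimal Python sketch. It assumes that one CPU credit equals one vCPU running at 100% for one minute. The earn rate, the credit cap, and the workload shape are illustrative parameters chosen for this example, not quoted figures for any particular instance size.

```python
def simulate_credits(hourly_cpu_percent, earn_per_hour, max_balance, start_balance=0.0):
    """Track a burstable instance's CPU credit balance hour by hour.

    Assumption: one credit = one vCPU at 100% utilization for one minute,
    so an hour at p% utilization on a single vCPU spends 60 * p / 100 credits.
    """
    balance = start_balance
    history = []
    for cpu in hourly_cpu_percent:
        spent = 60.0 * cpu / 100.0
        # Credits accumulate while idle, are consumed while busy,
        # and the balance is clamped between zero and the cap.
        balance = min(max_balance, max(0.0, balance + earn_per_hour - spent))
        history.append(balance)
    return history


# Hypothetical legacy workload: 19 quiet hours at 5% CPU, then one peak hour
# at 100% CPU, so the peak occupies 5% of the time.
workload = [5] * 19 + [100]
history = simulate_credits(workload, earn_per_hour=6, max_balance=144)
print(history[18], history[19])
```

Under these assumed numbers the quiet hours bank enough credits to cover the single peak hour, which is the pattern that makes a burstable instance cheaper than a permanently oversized one.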

NEW QUESTION 2

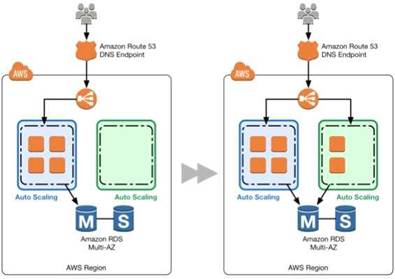

After a daily scrum with your development teams, you've agreed that using Blue/Green style deployments would benefit the team. Which technique should you use to deliver this new requirement?

- A. Re-deploy your application on AWS Elastic Beanstalk, and take advantage of Elastic Beanstalk deployment types.

- B. Using an AWS CloudFormation template, re-deploy your application behind a load balancer, launch a new AWS CloudFormation stack during each deployment, update your load balancer to send half your traffic to the new stack while you test, after verification update the load balancer to send 100% of traffic to the new stack, and then terminate the old stack.

- C. Create a new Auto Scaling group with the new launch configuration and desired capacity same as that of the initial Auto Scaling group and associate it with the same load balancer. Once the new Auto Scaling group's instances are registered with ELB, modify the desired capacity of the initial Auto Scaling group to zero and gradually delete the old Auto Scaling group.

- D. Using an AWS OpsWorks stack, re-deploy your application behind an Elastic Load Balancing load balancer and take advantage of OpsWorks stack versioning, during deployment create a new version of your application, tell OpsWorks to launch the new version behind your load balancer, and when the new version is launched, terminate the old OpsWorks stack.

Answer: C

Explanation:

This practice is described in the AWS Blue/Green Deployments whitepaper.

A blue group carries the production load while a green group is staged and deployed with the new code. When it's time to deploy, you simply attach the green group to the existing load balancer to introduce traffic to the new environment. For HTTP/HTTPS listeners, the load balancer favors the green Auto Scaling group because it uses a least outstanding requests routing algorithm.

As you scale up the green Auto Scaling group, you can take blue Auto Scaling group instances out of service by either terminating them or putting them in Standby state.

For more information on Blue/Green deployments, please refer to the AWS whitepaper linked below:

https://d0.awsstatic.com/whitepapers/AWS_Blue_Green_Deployments.pdf

NEW QUESTION 3

Your company has an on-premises Active Directory setup in place. The company has extended its footprint on AWS, but still wants to use its on-premises Active Directory for authentication. Which of the following AWS services can be used to ensure that AWS resources such as Amazon WorkSpaces can continue to use the existing credentials stored in the on-premises Active Directory?

- A. Use the Active Directory service on AWS

- B. Use the AWS Simple AD service

- C. Use the Active Directory connector service on AWS

- D. Use the ClassicLink feature on AWS

Answer: C

Explanation:

The AWS Documentation mentions the following:

AD Connector is a directory gateway with which you can redirect directory requests to your on-premises Microsoft Active Directory without caching any information in the cloud. AD Connector comes in two sizes, small and large. A small AD Connector is designed for smaller organizations of up to 500 users. A large AD Connector can support larger organizations of up to 5,000 users.

For more information on the AD connector, please refer to the below URL: http://docs.aws.amazon.com/directoryservice/latest/admin-guide/directory_ad_connector.html

NEW QUESTION 4

Which of the following services, along with CloudFormation, helps in building a Continuous Delivery release practice?

- A. AWS Config

- B. AWS Lambda

- C. AWS CloudTrail

- D. AWS CodePipeline

Answer: D

Explanation:

The AWS Documentation mentions:

Continuous delivery is a release practice in which code changes are automatically built, tested, and prepared for release to production. With AWS CloudFormation and AWS CodePipeline, you can use continuous delivery to automatically build and test changes to your AWS CloudFormation templates before promoting them to production stacks. This release process lets you rapidly and reliably make changes to your AWS infrastructure.

For more information on Continuous Delivery, please visit the below URL: http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/continuous-delivery-codepipeline.html

NEW QUESTION 5

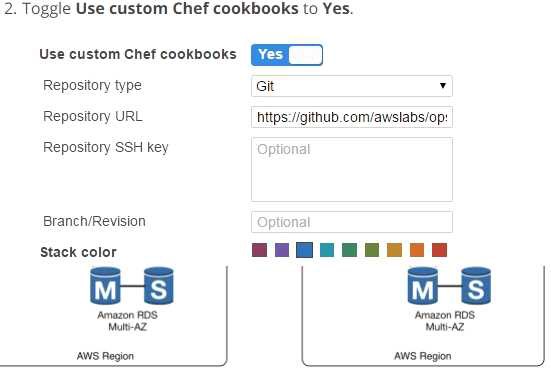

You are working with a customer who is using Chef Configuration management in their data center. Which service is designed to let the customer leverage existing Chef recipes in AWS?

- A. Amazon Simple Workflow Service

- B. AWS Elastic Beanstalk

- C. AWS CloudFormation

- D. AWS OpsWorks

Answer: D

Explanation:

AWS OpsWorks is a configuration management service that helps you configure and operate applications of all shapes and sizes using Chef. You can define the application's architecture and the specification of each component including package installation, software configuration and resources such as storage. Start from templates for common technologies like application servers and databases or build your own to perform any task that can be scripted. AWS OpsWorks includes automation to scale your application based on time or load and dynamic configuration to orchestrate changes as your environment scales.

For more information on OpsWorks, please visit the link:

• https://aws.amazon.com/opsworks/

NEW QUESTION 6

Your application consists of 10% writes and 90% reads. You currently service all requests through a Route53 Alias Record directed towards an AWS ELB, which sits in front of an EC2 Auto Scaling Group. Your system is getting very expensive when there are large traffic spikes during certain news events, during which many more people request to read similar data all at the same time. What is the simplest and cheapest way to reduce costs and scale with spikes like this?

- A. Create an S3 bucket and asynchronously replicate common request responses into S3 objects. When a request comes in for a precomputed response, redirect to AWS S3.

- B. Create another ELB and Auto Scaling Group layer mounted on top of the other system, adding a tier to the system. Serve most read requests out of the top layer.

- C. Create a CloudFront Distribution and direct Route53 to the Distribution. Use the ELB as an Origin and specify Cache Behaviours to proxy cache requests which can be served late.

- D. Create a Memcached cluster in AWS ElastiCache. Create cache logic to serve requests which can be served late from the in-memory cache for increased performance.

Answer: C

Explanation:

Use a CloudFront distribution to serve the heavy reads for your application. You can create a zone apex record to point to the CloudFront distribution.

You can control how long your objects stay in a CloudFront cache before CloudFront forwards another request to your origin. Reducing the duration allows you to serve dynamic content. Increasing the duration means your users get better performance because your objects are more likely to be served directly from the edge cache. A longer duration also reduces the load on your origin.

For more information on CloudFront object expiration, please visit the below URL: http://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/Expiration.html
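The trade-off between cache duration and origin load can be illustrated with a toy model. The sketch below is not CloudFront itself: `TtlCache`, `origin`, and the request pattern are hypothetical names and values used only to show how a longer time-to-live reduces the number of requests that reach the origin.

```python
class TtlCache:
    """Toy edge cache: serves a cached object until its TTL expires."""

    def __init__(self, ttl_seconds, fetch_from_origin):
        self.ttl = ttl_seconds
        self.fetch = fetch_from_origin
        self.store = {}        # path -> (expires_at, body)
        self.origin_hits = 0

    def get(self, path, now):
        entry = self.store.get(path)
        if entry is not None and now < entry[0]:
            return entry[1]    # cache hit: the origin is not contacted
        self.origin_hits += 1  # miss or expired: go back to the origin
        body = self.fetch(path)
        self.store[path] = (now + self.ttl, body)
        return body


def origin(path):
    # Stand-in for the ELB and Auto Scaling group behind the distribution.
    return f"article body for {path}"


def origin_hits_for(ttl_seconds, seconds=600):
    cache = TtlCache(ttl_seconds, origin)
    for t in range(seconds):   # one read per second for the same story
        cache.get("/news/breaking", now=t)
    return cache.origin_hits


print(origin_hits_for(60), origin_hits_for(300))
```

In this model, 600 reads of the same object reach the origin only a handful of times, and raising the TTL lowers that count further at the cost of staler content.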

NEW QUESTION 7

Your company is planning to develop an application in which the front end is in .Net and the backend is in DynamoDB. There is an expectation of a high load on the application. How could you ensure the scalability of the application to reduce the load on the DynamoDB database? Choose an answer from the options below.

- A. Add more DynamoDB databases to handle the load.

- B. Increase write capacity of DynamoDB to meet the peak loads.

- C. Use SQS to assist and let the application pull messages and then perform the relevant operation in DynamoDB.

- D. Launch DynamoDB in Multi-AZ configuration with a global index to balance writes.

Answer: C

Explanation:

When scalability is the goal, SQS is the best option here. DynamoDB is normally scalable on its own, but since a cost-effective solution is wanted, queuing messages in SQS helps absorb the load described in the question.

Amazon Simple Queue Service (SQS) is a fully-managed message queuing service for reliably communicating among distributed software components and microservices - at any scale. Building applications from individual components that each perform a discrete function improves scalability and reliability, and is best practice design for modern applications. SQS makes it simple and cost-effective to decouple and coordinate the components of a cloud application. Using SQS, you can send, store, and receive messages between software components at any volume, without losing messages or requiring other services to be always available.

For more information on SQS, please refer to the below URL:

• https://aws.amazon.com/sqs/
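The decoupling idea can be sketched without any AWS calls. The following Python example uses an in-memory `deque` as a stand-in for an SQS queue (it does not use the SQS API), and the arrival pattern and write rate are made-up numbers. It shows how a burst of writes is absorbed by the queue while the database receives a steady, bounded rate.

```python
from collections import deque


def drain_with_fixed_capacity(arrivals_per_second, writes_per_second):
    """Buffer bursty writes in a queue and apply them at a steady rate."""
    queue = deque()
    applied = []   # writes actually sent to the table each second
    backlog = []   # queue depth at the end of each second
    for second, arrivals in enumerate(arrivals_per_second):
        # Producers enqueue messages instead of writing to the table directly.
        queue.extend((second, n) for n in range(arrivals))
        # The consumer pulls at most `writes_per_second` messages per second.
        take = min(writes_per_second, len(queue))
        batch = [queue.popleft() for _ in range(take)]
        applied.append(len(batch))
        backlog.append(len(queue))
    return applied, backlog


# Hypothetical burst: 50 writes arrive at once, the table is provisioned for 10/s.
applied, backlog = drain_with_fixed_capacity([50, 0, 0, 0, 0], writes_per_second=10)
print(applied, backlog)
```

The table never sees more than the provisioned rate, so write capacity can be sized for the average load rather than the peak.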

NEW QUESTION 8

You have instances running on your VPC. You have both production and development based instances running in the VPC. You want to ensure that people who are responsible for the development instances don't have the access to work on the production instances to ensure better security. Using policies, which of the following would be the best way to accomplish this? Choose the correct answer from the options given below

- A. Launch the test and production instances in separate VPCs and use VPC peering

- B. Create an IAM policy with a condition which allows access to only instances that are used for production or development

- C. Launch the test and production instances in different Availability Zones and use Multi-Factor Authentication

- D. Define the tags on the test and production servers and add a condition to the IAM policy which allows access to specific tags

Answer: D

Explanation:

You can easily add tags which define which instances are production and which are development instances and then ensure these tags are used when controlling access via an IAM policy.

For more information on tagging your resources, please refer to the below link: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/Using_Tags.html
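As a rough illustration of a tag-based condition, the Python sketch below builds an IAM policy document as a plain dictionary. The tag key `Environment`, the tag value `Development`, and the chosen actions are assumptions made for this example; the `ec2:ResourceTag/<key>` condition key is the standard way to match a tag on an EC2 resource.

```python
import json


def tag_scoped_policy(tag_key, tag_value, actions):
    """Build a policy document allowing actions only on instances with a given tag."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": actions,
                "Resource": "arn:aws:ec2:*:*:instance/*",
                # Access applies only when the instance carries the matching tag.
                "Condition": {
                    "StringEquals": {f"ec2:ResourceTag/{tag_key}": tag_value}
                },
            }
        ],
    }


# Hypothetical tag scheme: development staff may only manage Development instances.
dev_policy = tag_scoped_policy(
    "Environment",
    "Development",
    ["ec2:StartInstances", "ec2:StopInstances", "ec2:RebootInstances"],
)
print(json.dumps(dev_policy, indent=2))
```

A matching policy for production operators would use the same shape with a different tag value, so the separation lives in the tags rather than in per-instance ARNs.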

NEW QUESTION 9

You've created a CloudFormation template as per your team's request, which is required for testing an application. However, there is a requirement that when the stack is deleted, the database is preserved for future reference. How can you achieve this using CloudFormation?

- A. Ensure that the RDS instance is created with Read Replicas so that the Read Replica remains after the stack is torn down.

- B. In the AWS CloudFormation template, set the AWS::RDS::DBInstance's DeletionPolicy property to "Retain."

- C. In the AWS CloudFormation template, set the AWS::RDS::DBInstance's WaitPolicy property to "Retain."

- D. In the AWS CloudFormation template, set the AWS::RDS::DBInstance's DBInstanceClass property to be read-only.

Answer: B

Explanation:

With the DeletionPolicy attribute you can preserve or (in some cases) back up a resource when its stack is deleted. You specify a DeletionPolicy attribute for each resource that you want to control. If a resource has no DeletionPolicy attribute, AWS CloudFormation deletes the resource by default. Note that this capability also applies to update operations that lead to resources being removed.

For more information on the CloudFormation DeletionPolicy attribute, please visit the below URL:

• http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/aws-attribute-deletionpolicy.html
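A short sketch may help show where the attribute sits in a template. The example below expresses a template as a Python dictionary; the logical ID `TestDatabase`, the parameter names, and the property values are hypothetical and chosen only for illustration.

```python
import json

# Hypothetical template for illustration; resource and parameter names are made up.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {
        "DBUser": {"Type": "String", "NoEcho": True},
        "DBPassword": {"Type": "String", "NoEcho": True},
    },
    "Resources": {
        "TestDatabase": {
            "Type": "AWS::RDS::DBInstance",
            # DeletionPolicy is a resource attribute, a sibling of Type and
            # Properties, not an entry inside Properties.
            "DeletionPolicy": "Retain",
            "Properties": {
                "Engine": "mysql",
                "DBInstanceClass": "db.t3.micro",
                "AllocatedStorage": "20",
                "MasterUsername": {"Ref": "DBUser"},
                "MasterUserPassword": {"Ref": "DBPassword"},
            },
        }
    },
}


def retained_resources(tpl):
    """Return logical IDs of resources that survive stack deletion."""
    return sorted(
        name
        for name, res in tpl["Resources"].items()
        if res.get("DeletionPolicy") == "Retain"
    )


print(retained_resources(template))
print(json.dumps(template["Resources"]["TestDatabase"], indent=2))
```

A helper like `retained_resources` is one way to review a template before deletion and confirm which resources will be left behind.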

NEW QUESTION 10

You currently have EC2 Instances hosting an application. These instances are part of an Auto Scaling group. You now want to change the instance type of the EC2 Instances. How can you manage the deployment with the least amount of downtime?

- A. Terminate the existing Auto Scaling group. Create a new launch configuration with the new instance type. Attach that to the new Auto Scaling group.

- B. Use the AutoScalingRollingUpdate policy on the CloudFormation template's Auto Scaling group

- C. Use the Rolling Update feature which is available for EC2 Instances.

- D. Manually terminate the instances, launch new instances with the new instance type and attach them to the Auto Scaling group

Answer: B

Explanation:

The AWS::AutoScaling::AutoScalingGroup resource supports an UpdatePolicy attribute. This is used to define how an Auto Scaling group resource is updated when an update to the CloudFormation stack occurs. A common approach to updating an Auto Scaling group is to perform a rolling update, which is done by specifying the AutoScalingRollingUpdate policy. This retains the same Auto Scaling group and replaces old instances with new ones, according to the parameters specified.

For more information on AutoScaling Rolling Update, please refer to the below link:

• https://aws.amazon.com/premiumsupport/knowledge-center/auto-scaling-group-rolling-updates/
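The fragment below sketches what such an UpdatePolicy might look like, written as a Python dictionary that mirrors the JSON template structure. The logical IDs, the `AmiId` parameter reference, the sizes, and the pause time are illustrative assumptions, and the fragment is not a complete deployable template.

```python
import json

# Hypothetical fragment: logical IDs, sizes, and timings are illustrative only.
resources = {
    "AppLaunchConfig": {
        "Type": "AWS::AutoScaling::LaunchConfiguration",
        "Properties": {
            "ImageId": {"Ref": "AmiId"},
            # Changing the instance type here and updating the stack is what
            # causes the group's instances to be replaced.
            "InstanceType": "c4.large",
        },
    },
    "AppGroup": {
        "Type": "AWS::AutoScaling::AutoScalingGroup",
        # UpdatePolicy sits next to Properties on the Auto Scaling group.
        "UpdatePolicy": {
            "AutoScalingRollingUpdate": {
                "MinInstancesInService": 2,  # keep serving traffic during the update
                "MaxBatchSize": 1,           # replace one instance at a time
                "PauseTime": "PT5M",         # ISO 8601 duration between batches
            }
        },
        "Properties": {
            "LaunchConfigurationName": {"Ref": "AppLaunchConfig"},
            "MinSize": "2",
            "MaxSize": "4",
            "AvailabilityZones": {"Fn::GetAZs": ""},
        },
    },
}

rolling = resources["AppGroup"]["UpdatePolicy"]["AutoScalingRollingUpdate"]
print(json.dumps(rolling, indent=2))
```

Keeping `MinInstancesInService` above zero is what lets the group stay in service while old instances are swapped out in batches.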

NEW QUESTION 11

You have a complex system that involves networking, IAM policies, and multiple, three-tier applications. You are still receiving requirements for the new system, so you don't yet know how many AWS components will be present in the final design. You want to start using AWS CloudFormation to define these AWS resources so that you can automate and version-control your infrastructure. How would you use AWS CloudFormation to provide agile new environments for your customers in a cost-effective, reliable manner?

- A. Manually create one template to encompass all the resources that you need for the system, so you only have a single template to version-control.

- B. Create multiple separate templates for each logical part of the system, create nested stacks in AWS CloudFormation, and maintain several templates to version-control.

- C. Create multiple separate templates for each logical part of the system, and provide the outputs from one to the next using an Amazon Elastic Compute Cloud (EC2) instance running the SDK for finer granularity of control.

- D. Manually construct the networking layer using Amazon Virtual Private Cloud (VPC) because this does not change often, and then use AWS CloudFormation to define all other ephemeral resources.

Answer: B

Explanation:

As your infrastructure grows, common patterns can emerge in which you declare the same components in each of your templates. You can separate out these common components and create dedicated templates for them. That way, you can mix and match different templates but use nested stacks to create a single, unified stack. Nested stacks are stacks that create other stacks. To create nested stacks, use the AWS::CloudFormation::Stack resource in your template to reference other templates.

For more information on CloudFormation best practices, please refer to the below link: http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/best-practices.html
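A parent template that composes nested stacks could be sketched as follows. The template URLs, the bucket name, the logical IDs, and the `VpcId` output are all hypothetical; the sketch only shows the `AWS::CloudFormation::Stack` resource type and how one nested stack's output can be passed to another.

```python
import json


def nested_stack(template_url, parameters=None):
    """Build an AWS::CloudFormation::Stack resource pointing at a child template."""
    resource = {
        "Type": "AWS::CloudFormation::Stack",
        "Properties": {"TemplateURL": template_url},
    }
    if parameters:
        resource["Properties"]["Parameters"] = parameters
    return resource


# Hypothetical layout: one child template per logical part of the system.
base = "https://s3.amazonaws.com/example-bucket/templates"
parent = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Resources": {
        "Network": nested_stack(f"{base}/network.yaml"),
        "Security": nested_stack(f"{base}/iam.yaml"),
        "AppTier": nested_stack(
            f"{base}/app.yaml",
            # An output of the Network child stack feeds the AppTier child stack.
            {"VpcId": {"Fn::GetAtt": ["Network", "Outputs.VpcId"]}},
        ),
    },
}

print(json.dumps(parent, indent=2))
```

Each child template can then be versioned and reviewed on its own, while the parent remains the single entry point for creating an environment.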

NEW QUESTION 12

You have carried out a deployment using Elastic Beanstalk with the All at once method, but the application is unavailable. What could be the reason for this?

- A. You need to configure ELB along with Elastic Beanstalk

- B. You need to configure Route53 along with Elastic Beanstalk

- C. There will always be a few seconds of downtime before the application is available

- D. The cooldown period is not properly configured for Elastic Beanstalk

Answer: C

Explanation:

The AWS Documentation mentions

Because Elastic Beanstalk uses a drop-in upgrade process, there might be a few seconds of downtime. Use rolling deployments to minimize the effect of deployments on your production environments.

For more information on troubleshooting Elastic Beanstalk, please refer to the below link:

• http://docs.aws.amazon.com/elasticbeanstalk/latest/dg/troubleshooting-deployments.html

• https://docs.aws.amazon.com/elasticbeanstalk/latest/dg/using-features.deploy-existing-version.html

NEW QUESTION 13

You have been tasked with deploying a scalable distributed system using AWS OpsWorks. Your distributed system is required to scale on demand. As it is distributed, each node must hold a configuration file that includes the hostnames of the other instances within the layer. How should you configure AWS OpsWorks to manage scaling this application dynamically?

- A. Create a Chef Recipe to update this configuration file, configure your AWS OpsWorks stack to use custom cookbooks, and assign this recipe to the Configure LifeCycle Event of the specific layer.

- B. Update this configuration file by writing a script to poll the AWS OpsWorks service API for new instances. Configure your base AMI to execute this script on Operating System startup.

- C. Create a Chef Recipe to update this configuration file, configure your AWS OpsWorks stack to use custom cookbooks, and assign this recipe to execute when instances are launched.

- D. Configure your AWS OpsWorks layer to use the AWS-provided recipe for distributed host configuration, and configure the instance hostname and file path parameters in your recipes settings.

Answer: A

Explanation:

Please check the following AWS documentation, which provides details on this scenario. Check the example of "configure".

• https://docs.aws.amazon.com/opsworks/latest/userguide/workingcookbook-events.html

You can use the Configure Lifecycle event. This event occurs on all of the stack's instances when one of the following occurs:

• An instance enters or leaves the online state.

• You associate an Elastic IP address with an instance or disassociate one from an instance.

• You attach an Elastic Load Balancing load balancer to a layer, or detach one from a layer.

Ensure the OpsWorks layer uses a custom cookbook.

For more information on OpsWorks stacks, please refer to the AWS document linked below:

• http://docs.aws.amazon.com/opsworks/latest/userguide/welcome_classic.html

NEW QUESTION 14

You work for a startup that has developed a new photo-sharing application for mobile devices. Over recent months your application has increased in popularity; this has resulted in a decrease in the performance of the application due to the increased load. Your application has a two-tier architecture that is composed of an Auto Scaling PHP application tier and a MySQL RDS instance initially deployed with AWS CloudFormation. Your Auto Scaling group has a min value of 4 and a max value of 8. The desired capacity is now at 8 because of the high CPU utilization of the instances. After some analysis, you are confident that the performance issues stem from a constraint in CPU capacity, although memory utilization remains low. You therefore decide to move from the general-purpose M3 instances to the compute-optimized C3 instances. How would you deploy this change while minimizing any interruption to your end users?

- A. Sign into the AWS Management Console, copy the old launch configuration, and create a new launch configuration that specifies the C3 instances. Update the Auto Scaling group with the new launch configuration. Auto Scaling will then update the instance type of all running instances.

- B. Sign into the AWS Management Console, and update the existing launch configuration with the new C3 instance type. Add an UpdatePolicy attribute to your Auto Scaling group that specifies AutoScalingRollingUpdate.

- C. Update the launch configuration specified in the AWS CloudFormation template with the new C3 instance type. Run a stack update with the new template. Auto Scaling will then update the instances with the new instance type.

- D. Update the launch configuration specified in the AWS CloudFormation template with the new C3 instance type. Also add an UpdatePolicy attribute to your Auto Scaling group that specifies AutoScalingRollingUpdate. Run a stack update with the new template.

Answer: D

Explanation:

The AWS::AutoScaling::AutoScalingGroup resource supports an UpdatePolicy attribute. This is used to define how an Auto Scaling group resource is updated when an update to the CloudFormation stack occurs. A common approach to updating an Auto Scaling group is to perform a rolling update, which is done by specifying the AutoScalingRollingUpdate policy. This retains the same Auto Scaling group and replaces old instances with new ones, according to the parameters specified. For more information on rolling updates, please visit the below link:

• https://aws.amazon.com/premiumsupport/knowledge-center/auto-scaling-group-rolling-updates/

NEW QUESTION 15

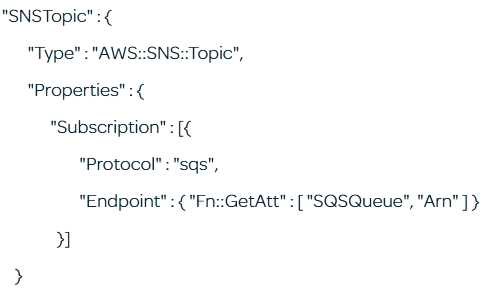

What does the following resource in a CloudFormation template do? Choose the best possible answer.

- A. Creates an SNS topic which allows SQS subscription endpoints to be added as a parameter on the template

- B. Creates an SNS topic that allows SQS subscription endpoints

- C. Creates an SNS topic and then invokes the call to create an SQS queue with a logical resource name of SQSQueue

- D. Creates an SNS topic and adds a subscription ARN endpoint for the SQS resource created under the logical name SQSQueue

Answer: D

Explanation:

The intrinsic function Fn::GetAtt returns the value of an attribute from a resource in the template. This has nothing to do with adding parameters (Option A is wrong) or allowing endpoints (Option B is wrong) or invoking relevant calls (Option C is wrong).

For more information on the Fn::GetAtt function, please refer to the below link:

http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/intrinsic-function-reference-getatt.html
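The template from the question is only available as an image, so the fragment below is an assumed reconstruction of what such a resource typically looks like, based on the answer options. The `resolve_getatt` helper and the queue ARN are hypothetical and exist only to show what Fn::GetAtt conceptually substitutes.

```python
# Assumed reconstruction of the kind of resource the question refers to.
template = {
    "Resources": {
        "SQSQueue": {"Type": "AWS::SQS::Queue"},
        "SNSTopic": {
            "Type": "AWS::SNS::Topic",
            "Properties": {
                "Subscription": [
                    {
                        "Protocol": "sqs",
                        # The endpoint is the ARN attribute of the SQSQueue resource.
                        "Endpoint": {"Fn::GetAtt": ["SQSQueue", "Arn"]},
                    }
                ]
            },
        },
    }
}


def resolve_getatt(value, attributes):
    """Replace {"Fn::GetAtt": [id, attr]} nodes using a table of known attributes."""
    if isinstance(value, dict):
        if set(value) == {"Fn::GetAtt"}:
            logical_id, attr = value["Fn::GetAtt"]
            return attributes[logical_id][attr]
        return {k: resolve_getatt(v, attributes) for k, v in value.items()}
    if isinstance(value, list):
        return [resolve_getatt(v, attributes) for v in value]
    return value


# Hypothetical attribute value that the service would supply at deploy time.
known = {"SQSQueue": {"Arn": "arn:aws:sqs:us-east-1:111122223333:example-queue"}}
resolved = resolve_getatt(template["Resources"]["SNSTopic"], known)
print(resolved["Properties"]["Subscription"][0]["Endpoint"])
```

The point of the sketch is that the topic does not create the queue or take it as a parameter; it simply reads an attribute of a resource already declared under the logical name SQSQueue.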

NEW QUESTION 16

A user is using CloudFormation to launch an EC2 instance and then configure an application after the instance is launched. The user wants the stack creation of ELB and AutoScaling to wait until the EC2 instance is launched and configured properly. How can the user configure this?

- A. It is not possible that the stack creation will wait until one service is created and launched

- B. The user can use the HoldCondition resource to wait for the creation of the other dependent resources

- C. The user can use the DependentCondition resource to hold the creation of the other dependent resources

- D. The user can use the WaitCondition resource to hold the creation of the other dependent resources

Answer: D

Explanation:

You can use a wait condition for situations like the following:

• To coordinate stack resource creation with configuration actions that are external to the stack creation

• To track the status of a configuration process

For more information on the CloudFormation wait condition, please visit the link:

http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/aws-properties-waitcondition.html
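A rough sketch of how a wait condition gates later resources is shown below. The logical IDs are hypothetical and most resource properties are omitted, so this is a fragment rather than a deployable template. The `creation_order` helper considers only explicit DependsOn attributes (not Ref-based dependencies) and uses Python's standard `graphlib` module to show the resulting ordering.

```python
from graphlib import TopologicalSorter

# Hypothetical fragment: logical IDs are made up and most properties are omitted.
resources = {
    "WaitHandle": {"Type": "AWS::CloudFormation::WaitConditionHandle"},
    "AppServer": {"Type": "AWS::EC2::Instance"},
    "AppReady": {
        "Type": "AWS::CloudFormation::WaitCondition",
        "DependsOn": "AppServer",
        "Properties": {
            "Handle": {"Ref": "WaitHandle"},
            "Timeout": "600",  # seconds to wait for the success signal
            "Count": 1,        # number of success signals expected
        },
    },
    "LoadBalancer": {
        "Type": "AWS::ElasticLoadBalancing::LoadBalancer",
        # Creation is held until the wait condition has been satisfied.
        "DependsOn": "AppReady",
    },
}


def creation_order(res):
    """Order resources so that every DependsOn target comes first."""
    graph = {}
    for name, body in res.items():
        deps = body.get("DependsOn", [])
        graph[name] = {deps} if isinstance(deps, str) else set(deps)
    return list(TopologicalSorter(graph).static_order())


order = creation_order(resources)
print(order)
```

In a real stack the instance's bootstrap script would signal the wait handle once the application is configured, and only then would the load balancer and anything depending on it be created.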

NEW QUESTION 17

Which of the following features of the Auto Scaling group ensures that additional instances are neither launched nor terminated before the previous scaling activity takes effect?

- A. Termination policy

- B. Cool down period

- C. Ramp up period

- D. Creation policy

Answer: B

Explanation:

The AWS documentation mentions:

The Auto Scaling cooldown period is a configurable setting for your Auto Scaling group that helps to ensure that Auto Scaling doesn't launch or terminate additional instances before the previous scaling activity takes effect. After the Auto Scaling group dynamically scales using a simple scaling policy, Auto Scaling waits for the cooldown period to complete before resuming scaling activities. When you manually scale your Auto Scaling group, the default is not to wait for the cooldown period, but you can override the default and honor the cooldown period. If an instance becomes unhealthy, Auto Scaling does not wait for the cooldown period to complete before replacing the unhealthy instance.

For more information on the cooldown period, please refer to the below URL:

• http://docs.aws.amazon.com/autoscaling/latest/userguide/Cooldown.html
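The effect of a cooldown can be sketched with a small simulation. This is a simplified model, not the service's actual algorithm: it assumes the cooldown is measured from the moment a scaling activity is triggered, and the alarm timestamps and the 300-second cooldown are example values.

```python
def apply_scaling_alarms(alarm_times, cooldown_seconds):
    """Return the alarm times that actually trigger a simple-scaling activity.

    Simplification: the cooldown clock starts when an activity is triggered,
    and any alarm that fires inside the cooldown window is ignored.
    """
    acted = []
    last_activity = None
    for t in sorted(alarm_times):
        if last_activity is None or t - last_activity >= cooldown_seconds:
            acted.append(t)
            last_activity = t
    return acted


# Hypothetical alarm timestamps in seconds since the first alarm.
alarms = [0, 60, 120, 300, 330, 650]
acted = apply_scaling_alarms(alarms, cooldown_seconds=300)
print(acted)
```

Alarms that arrive while the previous activity is still settling are skipped, which prevents the group from launching or terminating instances based on metrics that do not yet reflect the last change.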

NEW QUESTION 18

If I want CloudFormation stack status updates to show up in a continuous delivery system in as close to real time as possible, how should I achieve this?

- A. Use a long-poll on the Resources object in your CloudFormation stack and display those state changes in the UI for the system.

- B. Use a long-poll on the ListStacks API call for your CloudFormation stack and display those state changes in the UI for the system.

- C. Subscribe your continuous delivery system to an SNS topic that you also tell your CloudFormation stack to publish events into.

- D. Subscribe your continuous delivery system to an SQS queue that you also tell your CloudFormation stack to publish events into.

Answer: C

Explanation:

You can monitor the progress of a stack update by viewing the stack's events. The console's Events tab displays each major step in the creation and update of the stack, sorted by the time of each event with the latest events on top. The start of the stack update process is marked with an UPDATE_IN_PROGRESS event for the stack.

For more information on monitoring your stack, please visit the below URL:

http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/using-cfn-updating-stacks-monitor-stack.html

NEW QUESTION 19

Which of these is not an intrinsic function in AWS CloudFormation?

- A. Fn::Equals

- B. Fn::If

- C. Fn::Not

- D. Fn::Parse

Answer: D

Explanation:

You can use intrinsic functions, such as Fn::If, Fn::Equals, and Fn::Not, to conditionally create stack resources. These conditions are evaluated based on input parameters that you declare when you create or update a stack. After you define all your conditions, you can associate them with resources or resource properties in the Resources and Outputs sections of a template.

For more information on CloudFormation template functions, please refer to the URLs:

• http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/intrinsic-function-reference.html and

• http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/intrinsic-function-reference-conditions.html
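To show how the condition functions fit together, the sketch below defines a small template as a Python dictionary and evaluates it with a toy interpreter. The parameter name `EnvType`, the condition names, and the instance types are hypothetical, and the `evaluate` function is a simplified illustration that handles only the few functions used here, not a reimplementation of CloudFormation.

```python
# Hypothetical template using Fn::Equals, Fn::Not, and Fn::If.
template = {
    "Parameters": {"EnvType": {"Type": "String", "AllowedValues": ["prod", "dev"]}},
    "Conditions": {
        "IsProd": {"Fn::Equals": [{"Ref": "EnvType"}, "prod"]},
        "IsNotProd": {"Fn::Not": [{"Condition": "IsProd"}]},
    },
    "Resources": {
        "AppServer": {
            "Type": "AWS::EC2::Instance",
            "Properties": {
                "InstanceType": {"Fn::If": ["IsProd", "m4.large", "t2.micro"]}
            },
        }
    },
}


def evaluate(expr, params, conditions):
    """Toy evaluator for the handful of functions used in this example."""
    if isinstance(expr, dict) and len(expr) == 1:
        (key, arg), = expr.items()
        if key == "Ref":
            return params[arg]
        if key == "Condition":
            return evaluate(conditions[arg], params, conditions)
        if key == "Fn::Equals":
            left, right = arg
            return evaluate(left, params, conditions) == evaluate(right, params, conditions)
        if key == "Fn::Not":
            return not evaluate(arg[0], params, conditions)
        if key == "Fn::If":
            name, if_true, if_false = arg
            chosen = if_true if evaluate(conditions[name], params, conditions) else if_false
            return evaluate(chosen, params, conditions)
    return expr


def instance_type_for(env):
    expr = template["Resources"]["AppServer"]["Properties"]["InstanceType"]
    return evaluate(expr, {"EnvType": env}, template["Conditions"])


print(instance_type_for("prod"), instance_type_for("dev"))
```

The same input parameter therefore drives different resource properties per environment, which is the conditional behaviour the explanation describes.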

NEW QUESTION 20

You run accounting software in the AWS cloud. This software needs to be online continuously during the day every day of the week, and has a very static requirement for compute resources. You also have other, unrelated batch jobs that need to run once per day at any time of your choosing. How should you minimize cost?

- A. Purchase a Heavy Utilization Reserved Instance to run the accounting software. Turn it off after hours. Run the batch jobs with the same instance class, so the Reserved Instance credits are also applied to the batch jobs.

- B. Purchase a Medium Utilization Reserved Instance to run the accounting software. Turn it off after hours. Run the batch jobs with the same instance class, so the Reserved Instance credits are also applied to the batch jobs.

- C. Purchase a Light Utilization Reserved Instance to run the accounting software. Turn it off after hours. Run the batch jobs with the same instance class, so the Reserved Instance credits are also applied to the batch jobs.

- D. Purchase a Full Utilization Reserved Instance to run the accounting software. Turn it off after hours. Run the batch jobs with the same instance class, so the Reserved Instance credits are also applied to the batch jobs.

Answer: A

Explanation:

Reserved Instances provide you with a significant discount compared to On-Demand Instance pricing. Reserved Instances are not physical instances, but rather a billing discount applied to the use of On-Demand Instances in your account. These On-Demand Instances must match certain attributes in order to benefit from the billing discount.

For more information, please refer to the below links:

• https://aws.amazon.com/about-aws/whats-new/2011/12/01/New-Amazon-EC2-Reserved-Instances-Options-Now-Available/

• https://aws.amazon.com/blogs/aws/reserved-instance-options-for-amazon-ec2/

• http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-reserved-instances.html

Note: These utilization-based options no longer appear to be available. Convertible, Standard, and Scheduled appear to be the current Reserved Instance offerings. However, the exam may still refer to the old RI types. https://aws.amazon.com/ec2/pricing/reserved-instances/

NEW QUESTION 21

You have a development team that is continuously spending a lot of time rolling back updates for an application. They work on changes, and if the change fails, they spend more than 5-6 hours rolling back the update. Which of the below options can help reduce the time for rolling back application versions?

- A. Use Elastic Beanstalk and re-deploy using Application Versions

- B. Use S3 to store each version and then re-deploy with Elastic Beanstalk

- C. Use CloudFormation and update the stack with the previous template

- D. Use OpsWorks and re-deploy using rollback feature.

Answer: A

Explanation:

Option B is invalid because Elastic Beanstalk already has the facility to manage various versions and you don't need to use S3 separately for this.

Option C is invalid because in CloudFormation you will need to maintain the versions. Elastic Beanstalk can do that automatically for you.

Option D is good for production scenarios and Elastic Beanstalk is great for development scenarios. AWS Elastic Beanstalk is the perfect solution for developers to maintain application versions.

With AWS Elastic Beanstalk, you can quickly deploy and manage applications in the AWS Cloud without worrying about the infrastructure that runs those applications. AWS Elastic Beanstalk reduces management complexity without restricting choice or control. You simply upload your application, and AWS Elastic Beanstalk automatically handles the details of capacity provisioning, load balancing, scaling, and application health monitoring.

For more information on AWS Elastic Beanstalk, please refer to the below link: https://aws.amazon.com/documentation/elastic-beanstalk/

NEW QUESTION 22

A vendor needs access to your AWS account. They need to be able to read protected messages in a private S3 bucket. They have a separate AWS account. Which of the solutions below is the best way to do this?

- A. Allow the vendor to ssh into your EC2 instance and grant them an IAM role with full access to the bucket.

- B. Create a cross-account IAM role with permission to access the bucket, and grant permission to use the role to the vendor AWS account.

- C. Create an IAM User with API Access Keys. Give the vendor the AWS Access Key ID and AWS Secret Access Key for the user.

- D. Create an S3 bucket policy that allows the vendor to read from the bucket from their AWS account.

Answer: B

Explanation:

The AWS Documentation mentions the following on cross-account roles:

You can use AWS Identity and Access Management (IAM) roles and AWS Security Token Service (STS) to set up cross-account access between AWS accounts. When you assume an IAM role in another AWS account to obtain cross-account access to services and resources in that account, AWS CloudTrail logs the cross-account activity. For more information on cross-account roles, please visit the below URLs:

http://docs.aws.amazon.com/IAM/latest/UserGuide/tutorial_cross-account-with-roles.html

https://docs.aws.amazon.com/AmazonS3/latest/dev/example-walkthroughs-managing-access-example2.html
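A cross-account role is made of two policy documents: a trust policy that says who may assume the role, and a permissions policy that says what the role may do. The sketch below builds both as Python dictionaries. The account ID `111122223333`, the bucket name, and the choice of read-only S3 actions are assumptions for illustration.

```python
import json


def cross_account_role_policies(vendor_account_id, bucket_name):
    """Build the trust and permissions policies for a read-only cross-account role."""
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The vendor's account is allowed to assume this role via STS.
                "Principal": {"AWS": f"arn:aws:iam::{vendor_account_id}:root"},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    permissions_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:ListBucket"],
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
            }
        ],
    }
    return trust_policy, permissions_policy


# Hypothetical vendor account and bucket.
trust, permissions = cross_account_role_policies("111122223333", "example-protected-messages")
print(json.dumps(trust, indent=2))
print(json.dumps(permissions, indent=2))
```

With this arrangement no long-lived access keys are shared: the vendor obtains temporary credentials by assuming the role, and access can be revoked by editing or deleting the role.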

NEW QUESTION 23

You are planning on configuring logs for your Elastic Load Balancer. At what intervals are the logs published by the Elastic Load Balancing service? Choose 2 answers from the options given below.

- A. 5 minutes

- B. 60 minutes

- C. 1 minute

- D. 30 seconds

Answer: AB

Explanation:

The AWS Documentation mentions:

Elastic Load Balancing publishes a log file for each load balancer node at the interval you specify. You can specify a publishing interval of either 5 minutes or 60 minutes when you enable the access log for your load balancer. By default, Elastic Load Balancing publishes logs at a 60-minute interval.

For more information on Elastic load balancer logs please see the below link: http://docs.aws.amazon.com/elasticloadbalancing/latest/classic/access-log-collection.html
