Passing the Microsoft DP-201 exam on short notice is difficult without preparation material. Actualtests offers up-to-date Microsoft DP-201 practice questions, along with practice guides for Designing an Azure Data Solution.

Free DP-201 dump questions are also available:

NEW QUESTION 1

HOTSPOT

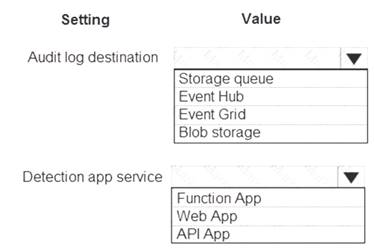

You need to ensure that security policies for the unauthorized detection system are met. What should you recommend? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

- A. Mastered

- B. Not Mastered

Answer: A

Explanation:

Box 1: Blob storage

Configure blob storage for audit logs.

Scenario: Unauthorized usage of the Planning Assistance data must be detected as quickly as possible. Unauthorized usage is determined by looking for an unusual pattern of usage.

Data used for Planning Assistance must be stored in a sharded Azure SQL Database.

Box 2: Web Apps

SQL Advanced Threat Protection (ATP) is to be used.

One of Azure’s most popular services is App Service, which enables customers to build and host web applications in the programming language of their choice without managing infrastructure. App Service offers auto-scaling and high availability, and supports both Windows and Linux. It also supports automated deployments from GitHub, Visual Studio Team Services, or any Git repository. At RSA, Microsoft announced that Azure Security Center leverages the scale of the cloud to identify attacks targeting App Service applications.

References:

https://azure.microsoft.com/sv-se/blog/azure-security-center-can-identify-attacks-targeting-azure-app-service-ap

NEW QUESTION 2

You need to optimize storage for CONT_SQL3. What should you recommend?

- A. AlwaysOn

- B. Transactional processing

- C. General

- D. Data warehousing

Answer: B

Explanation:

CONT_SQL3 has the SQL Server role and a 100 GB database, and runs on a Hyper-V VM that is to be migrated to an Azure VM. The storage should be optimized for database OLTP workloads.

Azure SQL Database provides three basic in-memory based capabilities (built into the underlying database engine) that can contribute in a meaningful way to performance improvements:

In-Memory Online Transactional Processing (OLTP)

Clustered columnstore indexes intended primarily for Online Analytical Processing (OLAP) workloads

Nonclustered columnstore indexes geared towards Hybrid Transactional/Analytical Processing (HTAP) workloads

References:

https://www.databasejournal.com/features/mssql/overview-of-in-memory-technologies-of-azure-sqldatabase.htm

NEW QUESTION 3

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

A company is developing a solution to manage inventory data for a group of automotive repair shops. The solution will use Azure SQL Data Warehouse as the data store. Shops will upload data every 10 days.

Data corruption checks must run each time data is uploaded. If corruption is detected, the corrupted data must be removed.

You need to ensure that upload processes and data corruption checks do not impact reporting and analytics processes that use the data warehouse.

Proposed solution: Insert data from shops and perform the data corruption check in a transaction. Roll back the transfer if corruption is detected.

Does the solution meet the goal?

- A. Yes

- B. No

Answer: B

Explanation:

Instead, create a user-defined restore point before data is uploaded. Delete the restore point after data corruption checks complete.

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/backup-and-restore

NEW QUESTION 4

A company purchases IoT devices to monitor manufacturing machinery. The company uses an IoT appliance to communicate with the IoT devices.

The company must be able to monitor the devices in real-time. You need to design the solution.

What should you recommend?

- A. Azure Stream Analytics cloud job using Azure PowerShell

- B. Azure Analysis Services using Azure Portal

- C. Azure Data Factory instance using Azure Portal

- D. Azure Analysis Services using Azure PowerShell

Answer: A

NEW QUESTION 5

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are designing an HDInsight/Hadoop cluster solution that uses Azure Data Lake Gen1 Storage. The solution requires POSIX permissions and enables diagnostics logging for auditing.

You need to recommend solutions that optimize storage.

Proposed Solution: Ensure that files stored are smaller than 250MB.

Does the solution meet the goal?

- A. Yes

- B. No

Answer: B

Explanation:

Ensure that files stored are larger, not smaller than 250MB.

You can have a separate compaction job that combines these files into larger ones.

Note: The file POSIX permissions and auditing in Data Lake Storage Gen1 come with an overhead that becomes apparent when working with numerous small files. As a best practice, you must batch your data into larger files versus writing thousands or millions of small files to Data Lake Storage Gen1. Avoiding small file sizes can have multiple benefits, such as:

- Lowering the authentication checks across multiple files

- Reduced open file connections

- Faster copying/replication

- Fewer files to process when updating Data Lake Storage Gen1 POSIX permissions

References:

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-best-practices
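The compaction job mentioned in the explanation for Question 5 can be sketched as a planning step that groups small files into batches of at least 250 MB. This is a minimal illustration in plain Python, not an Azure API: the function name `plan_compaction` and the greedy grouping strategy are assumptions made for the example, and a real job would also read and rewrite the file contents.

```python
from typing import List

TARGET_BYTES = 250 * 1024 * 1024  # 250 MB threshold taken from the explanation


def plan_compaction(file_sizes: List[int], target: int = TARGET_BYTES) -> List[List[int]]:
    """Group file sizes (in bytes) into batches of at least `target` bytes.

    Files already at or above the target are kept as single-file batches.
    The final leftover batch may be smaller than the target.
    """
    batches: List[List[int]] = []
    current: List[int] = []
    current_total = 0
    for size in file_sizes:
        if size >= target:
            # Large enough already: no need to merge it with anything.
            batches.append([size])
            continue
        current.append(size)
        current_total += size
        if current_total >= target:
            # The running batch has reached the target size; close it.
            batches.append(current)
            current, current_total = [], 0
    if current:
        batches.append(current)
    return batches
```

Each returned batch would become one larger output file, which reduces the number of files that permission checks and audit logging have to touch.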

NEW QUESTION 6

You need to design the solution for analyzing customer data. What should you recommend?

- A. Azure Databricks

- B. Azure Data Lake Storage

- C. Azure SQL Data Warehouse

- D. Azure Cognitive Services

- E. Azure Batch

Answer: A

Explanation:

Customer data must be analyzed using managed Spark clusters. You create Spark clusters through Azure Databricks.

References:

https://docs.microsoft.com/en-us/azure/azure-databricks/quickstart-create-databricks-workspace-portal

NEW QUESTION 7

You need to recommend an Azure SQL Database pricing tier for Planning Assistance. Which pricing tier should you recommend?

- A. Business critical Azure SQL Database single database

- B. General purpose Azure SQL Database Managed Instance

- C. Business critical Azure SQL Database Managed Instance

- D. General purpose Azure SQL Database single database

Answer: B

Explanation:

Azure resource costs must be minimized where possible.

Data used for Planning Assistance must be stored in a sharded Azure SQL Database. The SLA for Planning Assistance is 70 percent, and multiday outages are permitted.

NEW QUESTION 8

A company is designing a solution that uses Azure Databricks.

The solution must be resilient to regional Azure datacenter outages. You need to recommend the redundancy type for the solution. What should you recommend?

- A. Read-access geo-redundant storage

- B. Locally-redundant storage

- C. Geo-redundant storage

- D. Zone-redundant storage

Answer: C

Explanation:

If your storage account has GRS enabled, then your data is durable even in the case of a complete regional outage or a disaster in which the primary region isn’t recoverable.

References:

https://medium.com/microsoftazure/data-durability-fault-tolerance-resilience-in-azure-databricks-95392982bac7

NEW QUESTION 9

You are designing an Azure Databricks interactive cluster.

You need to ensure that the cluster meets the following requirements:

- Enable auto-termination.

- Retain cluster configuration indefinitely after cluster termination.

What should you recommend?

- A. Start the cluster after it is terminated.

- B. Pin the cluster

- C. Clone the cluster after it is terminated.

- D. Terminate the cluster manually at process completion.

Answer: B

Explanation:

To keep an interactive cluster configuration even after it has been terminated for more than 30 days, an administrator can pin a cluster to the cluster list.

References:

https://docs.azuredatabricks.net/user-guide/clusters/terminate.html

NEW QUESTION 10

You need to design the runtime environment for the Real Time Response system. What should you recommend?

- A. General Purpose nodes without the Enterprise Security package

- B. Memory Optimized Nodes without the Enterprise Security package

- C. Memory Optimized nodes with the Enterprise Security package

- D. General Purpose nodes with the Enterprise Security package

Answer: B

NEW QUESTION 11

You need to design the SensorData collection.

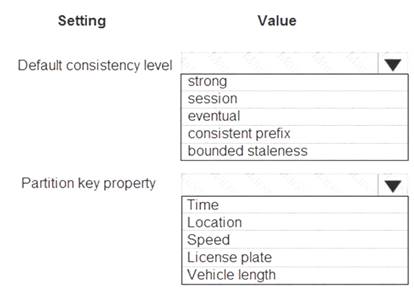

What should you recommend? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

- A. Mastered

- B. Not Mastered

Answer: A

Explanation:

Box 1: Eventual

Traffic data insertion rate must be maximized.

Sensor data must be stored in a Cosmos DB named treydata in a collection named SensorData

With Azure Cosmos DB, developers can choose from five well-defined consistency models on the consistency spectrum. From strongest to more relaxed, the models include strong, bounded staleness, session, consistent prefix, and eventual consistency.

Box 2: License plate

This solution reports on all data related to a specific vehicle license plate. The report must use data from the SensorData collection.

References:

https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels
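Question 11 picks the license plate as the partition key because the report reads all data for one vehicle. The sketch below shows the general idea of key-based partitioning: every document with the same key value lands in the same partition, so a per-vehicle query touches only one of them. The hash function, the partition count of 4, and the `licensePlate` field name are assumptions for illustration only and do not reflect how Cosmos DB hashes keys internally.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List


def partition_for(key: str, partition_count: int = 4) -> int:
    """Map a partition key value to a partition index (illustrative hash only)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def group_by_partition(readings: List[dict], partition_count: int = 4) -> Dict[int, List[dict]]:
    """Place each reading in the partition chosen by its licensePlate value."""
    partitions: Dict[int, List[dict]] = defaultdict(list)
    for reading in readings:
        index = partition_for(reading["licensePlate"], partition_count)
        partitions[index].append(reading)
    return dict(partitions)
```

Because the mapping depends only on the key value, all readings for a given plate are stored together regardless of when they arrive.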

NEW QUESTION 12

You are designing a real-time stream solution based on Azure Functions. The solution will process data uploaded to Azure Blob Storage.

The solution requirements are as follows:

- New blobs must be processed with as little delay as possible.

- Scaling must occur automatically.

- Costs must be minimized.

What should you recommend?

- A. Deploy the Azure Function in an App Service plan and use a Blob trigger.

- B. Deploy the Azure Function in a Consumption plan and use an Event Grid trigger.

- C. Deploy the Azure Function in a Consumption plan and use a Blob trigger.

- D. Deploy the Azure Function in an App Service plan and use an Event Grid trigger.

Answer: C

Explanation:

Create a function, with the help of a blob trigger template, which is triggered when files are uploaded to or updated in Azure Blob storage.

You use a consumption plan, which is a hosting plan that defines how resources are allocated to your function app. In the default Consumption Plan, resources are added dynamically as required by your functions. In this serverless hosting, you only pay for the time your functions run. When you run in an App Service plan, you must manage the scaling of your function app.

References:

https://docs.microsoft.com/en-us/azure/azure-functions/functions-create-storage-blob-triggered-function

NEW QUESTION 13

A company installs IoT devices to monitor its fleet of delivery vehicles. Data from devices is collected from Azure Event Hub.

The data must be transmitted to Power BI for real-time data visualizations. You need to recommend a solution.

What should you recommend?

- A. Azure HDInsight with Spark Streaming

- B. Apache Spark in Azure Databricks

- C. Azure Stream Analytics

- D. Azure HDInsight with Storm

Answer: C

Explanation:

Step 1: Get your IoT hub ready for data access by adding a consumer group.

Step 2: Create, configure, and run a Stream Analytics job for data transfer from your IoT hub to your Power BI account.

Step 3: Create and publish a Power BI report to visualize the data.

References:

https://docs.microsoft.com/en-us/azure/iot-hub/iot-hub-live-data-visualization-in-power-bi
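For Question 13, the kind of computation a streaming job typically performs before sending results to a dashboard is a windowed aggregate. Stream Analytics jobs are written in a SQL-like query language, so the Python below is only a sketch of the tumbling-window idea, with hypothetical event tuples of `(timestamp_seconds, device_id, value)` and an assumed 10-second window.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Event = Tuple[float, str, float]  # (timestamp in seconds, device id, measured value)


def tumbling_window_average(
    events: Iterable[Event], window_seconds: int = 10
) -> Dict[Tuple[int, str], float]:
    """Average the value per device within fixed, non-overlapping time windows.

    The result is keyed by (window start in seconds, device id).
    """
    sums: Dict[Tuple[int, str], float] = defaultdict(float)
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for timestamp, device_id, value in events:
        # Each event belongs to exactly one window: the one containing its timestamp.
        window_start = int(timestamp // window_seconds) * window_seconds
        sums[(window_start, device_id)] += value
        counts[(window_start, device_id)] += 1
    return {key: sums[key] / counts[key] for key in sums}
```

A dashboard would then receive one row per device per window rather than every raw event.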

NEW QUESTION 14

You need to recommend a backup strategy for CONT_SQL1 and CONT_SQL2. What should you recommend?

- A. Use AzCopy and store the data in Azure.

- B. Configure Azure SQL Database long-term retention for all databases.

- C. Configure Accelerated Database Recovery.

- D. Use DWLoader.

Answer: B

Explanation:

Scenario: The database backups have regulatory purposes and must be retained for seven years.

NEW QUESTION 15

You are evaluating data storage solutions to support a new application.

You need to recommend a data storage solution that represents data by using nodes and relationships in graph structures.

Which data storage solution should you recommend?

- A. Blob Storage

- B. Cosmos DB

- C. Data Lake Store

- D. HDInsight

Answer: B

Explanation:

For large graphs with lots of entities and relationships, you can perform very complex analyses very quickly. Many graph databases provide a query language that you can use to traverse a network of relationships efficiently.

Relevant Azure service: Cosmos DB

References:

https://docs.microsoft.com/en-us/azure/architecture/guide/technology-choices/data-store-overview

NEW QUESTION 16

You have an on-premises MySQL database that is 800 GB in size.

You need to migrate the MySQL database to Azure Database for MySQL. You must minimize service interruption to live sites or applications that use the database.

What should you recommend?

- A. Azure Database Migration Service

- B. Dump and restore

- C. Import and export

- D. MySQL Workbench

Answer: A

Explanation:

You can perform MySQL migrations to Azure Database for MySQL with minimal downtime by using the newly introduced continuous sync capability for the Azure Database Migration Service (DMS). This functionality limits the amount of downtime that is incurred by the application.

References:

https://docs.microsoft.com/en-us/azure/mysql/howto-migrate-online

NEW QUESTION 17

You are designing a data processing solution that will implement the lambda architecture pattern. The solution will use Spark running on HDInsight for data processing.

You need to recommend a data storage technology for the solution.

Which two technologies should you recommend? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- A. Azure Cosmos DB

- B. Azure Service Bus

- C. Azure Storage Queue

- D. Apache Cassandra

- E. Kafka HDInsight

Answer: AE

Explanation:

To implement a lambda architecture on Azure, you can combine the following technologies to accelerate real-time big data analytics:

- Azure Cosmos DB, the industry's first globally distributed, multi-model database service

- Apache Spark for Azure HDInsight, a processing framework that runs large-scale data analytics applications

- Azure Cosmos DB change feed, which streams new data to the batch layer for HDInsight to process

- The Spark to Azure Cosmos DB Connector

E: You can use Apache Spark to stream data into or out of Apache Kafka on HDInsight using DStreams.

References:

https://docs.microsoft.com/en-us/azure/cosmos-db/lambda-architecture
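The lambda architecture pattern in Question 17 combines a batch layer, which periodically recomputes views over all stored data, with a speed layer that covers events the last batch run has not seen yet. The sketch below shows that merge at query time using plain Python counters. The function names and the event-count example are hypothetical, and no Azure service is called.

```python
from collections import Counter
from typing import Dict, Iterable


def batch_view(master_events: Iterable[str]) -> Counter:
    """Batch layer: recompute counts from the full, immutable event history."""
    return Counter(master_events)


def speed_view(recent_events: Iterable[str]) -> Counter:
    """Speed layer: count only events that arrived after the last batch run."""
    return Counter(recent_events)


def serve(batch: Counter, speed: Counter) -> Dict[str, int]:
    """Serving layer: answer queries by merging the batch and speed views."""
    return dict(batch + speed)
```

For example, `serve(batch_view(["a", "b", "a"]), speed_view(["a", "c"]))` combines both views so that recent events are visible before the next batch recomputation.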

NEW QUESTION 18

You are designing an application. You plan to use Azure SQL Database to support the application.

The application will extract data from the Azure SQL Database and create text documents. The text documents will be placed into a cloud-based storage solution. The text storage solution must be accessible from an SMB network share.

You need to recommend a data storage solution for the text documents. Which Azure data storage type should you recommend?

- A. Queue

- B. Files

- C. Blob

- D. Table

Answer: B

Explanation:

Azure Files enables you to set up highly available network file shares that can be accessed by using the standard Server Message Block (SMB) protocol.

References:

https://docs.microsoft.com/en-us/azure/storage/common/storage-introduction

https://docs.microsoft.com/en-us/azure/storage/tables/table-storage-overview

NEW QUESTION 19

You need to design the unauthorized data usage detection system. What Azure service should you include in the design?

- A. Azure Databricks

- B. Azure SQL Data Warehouse

- C. Azure Analysis Services

- D. Azure Data Factory

Answer: B

NEW QUESTION 20

You are designing an Azure SQL Data Warehouse. You plan to load millions of rows of data into the data warehouse each day.

You must ensure that staging tables are optimized for data loading. You need to design the staging tables.

What type of tables should you recommend?

- A. Round-robin distributed table

- B. Hash-distributed table

- C. Replicated table

- D. External table

Answer: A

Explanation:

To achieve the fastest loading speed for moving data into a data warehouse table, load data into a staging table. Define the staging table as a heap and use round-robin for the distribution option.

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data
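To see why round-robin suits staging tables in Question 20, the sketch below compares how rows spread over the 60 distributions of an Azure SQL Data Warehouse table under the two strategies. It models row placement only: the hash used here is an arbitrary stand-in, not the engine's actual algorithm, and the function names are hypothetical.

```python
import hashlib
from typing import List, Sequence

DISTRIBUTIONS = 60  # each table is spread across 60 distributions


def round_robin_assign(rows: Sequence[str], distributions: int = DISTRIBUTIONS) -> List[int]:
    """Assign rows to distributions in turn, ignoring their content."""
    return [index % distributions for index in range(len(rows))]


def hash_assign(keys: Sequence[str], distributions: int = DISTRIBUTIONS) -> List[int]:
    """Assign rows to distributions by hashing a key column (illustrative hash)."""
    return [
        int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big") % distributions
        for key in keys
    ]
```

With a skewed key column (for instance, 600 rows that all share one key), `hash_assign` sends every row to a single distribution, while `round_robin_assign` places exactly 10 rows in each of the 60. Round-robin also needs no per-row hash computation, which is consistent with the guidance to use it for fast loads.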

NEW QUESTION 21

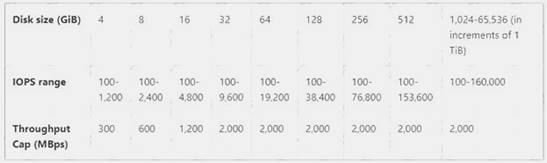

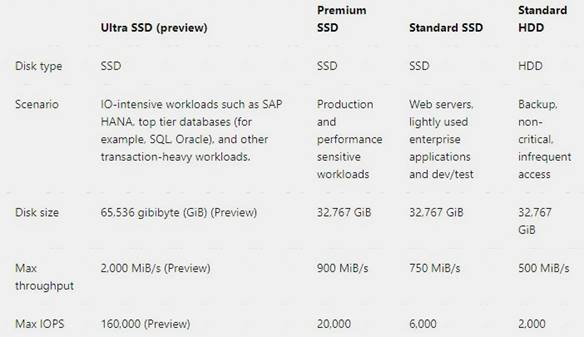

You need to design a solution to meet the SQL Server storage requirements for CONT_SQL3. Which type of disk should you recommend?

- A. Standard SSD Managed Disk

- B. Premium SSD Managed Disk

- C. Ultra SSD Managed Disk

Answer: C

Explanation:

CONT_SQL3 requires an initial scale of 35000 IOPS.

The following table provides a comparison of ultra solid-state-drives (SSD) (preview), premium SSD, standard SSD, and standard hard disk drives (HDD) for managed disks to help you decide what to use.

References:

https://docs.microsoft.com/en-us/azure/virtual-machines/windows/disks-types

NEW QUESTION 22

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are designing an Azure SQL Database that will use elastic pools. You plan to store data about customers in a table. Each record uses a value for CustomerID.

You need to recommend a strategy to partition data based on values in CustomerID.

Proposed Solution: Separate data into customer regions by using horizontal partitioning.

Does the solution meet the goal?

- A. Yes

- B. No

Answer: B

Explanation:

We should use Horizontal Partitioning through Sharding, not divide through regions.

Note: Horizontal Partitioning - Sharding: Data is partitioned horizontally to distribute rows across a scaled out data tier. With this approach, the schema is identical on all participating databases. This approach is also called “sharding”. Sharding can be performed and managed using (1) the elastic database tools libraries or (2) self-sharding. An elastic query is used to query or compile reports across many shards.

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-elastic-query-overview
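The sharding approach described for Question 22 can be sketched as a range-based shard map keyed on CustomerID. This is a self-sharding illustration in plain Python, not the elastic database tools API: the class name `RangeShardMap`, the shard names, and the range boundaries are all hypothetical.

```python
from bisect import bisect_right
from typing import List, Tuple


class RangeShardMap:
    """Route a CustomerID to the shard whose range contains it."""

    def __init__(self, ranges: List[Tuple[int, str]]):
        # Each entry is (inclusive lower bound of CustomerID, shard name).
        ordered = sorted(ranges)
        self._lower_bounds = [low for low, _ in ordered]
        self._shards = [name for _, name in ordered]

    def shard_for(self, customer_id: int) -> str:
        # Find the last range whose lower bound is <= customer_id.
        index = bisect_right(self._lower_bounds, customer_id) - 1
        if index < 0:
            raise KeyError(f"No shard covers CustomerID {customer_id}")
        return self._shards[index]
```

Every shard holds the same schema, and the map decides which database receives a given customer's rows. For example, with ranges starting at 1, 1000, and 2000, CustomerID 1500 routes to the second shard.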

