It is more faster and easier to pass the Amazon-Web-Services MLS-C01 exam by using Approved Amazon-Web-Services AWS Certified Machine Learning - Specialty questuins and answers. Immediate access to the Improve MLS-C01 Exam and find the same core area MLS-C01 questions with professionally verified answers, then PASS your exam with a high score now.

Online MLS-C01 free questions and answers of New Version:

NEW QUESTION 1

An insurance company is developing a new device for vehicles that uses a camera to observe drivers' behavior and alert them when they appear distracted The company created approximately 10,000 training images in a controlled environment that a Machine Learning Specialist will use to train and evaluate machine learning models

During the model evaluation the Specialist notices that the training error rate diminishes faster as the number of epochs increases and the model is not accurately inferring on the unseen test images

Which of the following should be used to resolve this issue? (Select TWO)

- A. Add vanishing gradient to the model

- B. Perform data augmentation on the training data

- C. Make the neural network architecture complex.

- D. Use gradient checking in the model

- E. Add L2 regularization to the model

Answer: BD

NEW QUESTION 2

A Machine Learning Specialist is working with a large company to leverage machine learning within its products. The company wants to group its customers into categories based on which customers will and will not churn within the next 6 months. The company has labeled the data available to the Specialist.

Which machine learning model type should the Specialist use to accomplish this task?

- A. Linear regression

- B. Classification

- C. Clustering

- D. Reinforcement learning

Answer: B

Explanation:

The goal of classification is to determine to which class or category a data point (customer in our case) belongs to. For classification problems, data scientists would use historical data with predefined target variables AKA labels (churner/non-churner) – answers that need to be predicted – to train an algorithm. With classification,

businesses can answer the following questions:  Will this customer churn or not?

Will this customer churn or not? Will a customer renew their subscription?

Will a customer renew their subscription?  Will a user downgrade a pricing plan?

Will a user downgrade a pricing plan? Are there any signs of unusual customer behavior?

Are there any signs of unusual customer behavior?

NEW QUESTION 3

A Data Science team is designing a dataset repository where it will store a large amount of training data commonly used in its machine learning models. As Data Scientists may create an arbitrary number of new datasets every day the solution has to scale automatically and be cost-effective. Also, it must be possible to explore the data using SQL.

Which storage scheme is MOST adapted to this scenario?

- A. Store datasets as files in Amazon S3.

- B. Store datasets as files in an Amazon EBS volume attached to an Amazon EC2 instance.

- C. Store datasets as tables in a multi-node Amazon Redshift cluster.

- D. Store datasets as global tables in Amazon DynamoDB.

Answer: A

NEW QUESTION 4

A financial services company is building a robust serverless data lake on Amazon S3. The data lake should be flexible and meet the following requirements:

* Support querying old and new data on Amazon S3 through Amazon Athena and Amazon Redshift Spectrum.

* Support event-driven ETL pipelines.

* Provide a quick and easy way to understand metadata. Which approach meets trfese requirements?

- A. Use an AWS Glue crawler to crawl S3 data, an AWS Lambda function to trigger an AWS Glue ETL job, and an AWS Glue Data catalog to search and discover metadata.

- B. Use an AWS Glue crawler to crawl S3 data, an AWS Lambda function to trigger an AWS Batch job, and an external Apache Hive metastore to search and discover metadata.

- C. Use an AWS Glue crawler to crawl S3 data, an Amazon CloudWatch alarm to trigger an AWS Batch job, and an AWS Glue Data Catalog to search and discover metadata.

- D. Use an AWS Glue crawler to crawl S3 data, an Amazon CloudWatch alarm to trigger an AWS Glue ETL job, and an external Apache Hive metastore to search and discover metadata.

Answer: B

NEW QUESTION 5

A Machine Learning Specialist is training a model to identify the make and model of vehicles in images The Specialist wants to use transfer learning and an existing model trained on images of general objects The Specialist collated a large custom dataset of pictures containing different vehicle makes and models

- A. Initialize the model with random weights in all layers including the last fully connected layer

- B. Initialize the model with pre-trained weights in all layers and replace the last fully connected layer.

- C. Initialize the model with random weights in all layers and replace the last fully connected layer

- D. Initialize the model with pre-trained weights in all layers including the last fully connected layer

Answer: B

NEW QUESTION 6

A manufacturing company asks its Machine Learning Specialist to develop a model that classifies defective parts into one of eight defect types. The company has provided roughly 100000 images per defect type for training During the injial training of the image classification model the Specialist notices that the validation accuracy is 80%, while the training accuracy is 90% It is known that human-level performance for this type of image classification is around 90%

What should the Specialist consider to fix this issue1?

- A. A longer training time

- B. Making the network larger

- C. Using a different optimizer

- D. Using some form of regularization

Answer: D

NEW QUESTION 7

A Machine Learning Specialist is building a convolutional neural network (CNN) that will classify 10 types of animals. The Specialist has built a series of layers in a neural network that will take an input image of an animal, pass it through a series of convolutional and pooling layers, and then finally pass it through a dense and fully connected layer with 10 nodes The Specialist would like to get an output from the neural network that is a probability distribution of how likely it is that the input image belongs to each of the 10 classes

Which function will produce the desired output?

- A. Dropout

- B. Smooth L1 loss

- C. Softmax

- D. Rectified linear units (ReLU)

Answer: D

NEW QUESTION 8

A Mobile Network Operator is building an analytics platform to analyze and optimize a company's operations using Amazon Athena and Amazon S3

The source systems send data in CSV format in real lime The Data Engineering team wants to transform the data to the Apache Parquet format before storing it on Amazon S3

Which solution takes the LEAST effort to implement?

- A. Ingest .CSV data using Apache Kafka Streams on Amazon EC2 instances and use Kafka Connect S3 to serialize data as Parquet

- B. Ingest .CSV data from Amazon Kinesis Data Streams and use Amazon Glue to convert data into Parquet.

- C. Ingest .CSV data using Apache Spark Structured Streaming in an Amazon EMR cluster and use Apache Spark to convert data into Parquet.

- D. Ingest .CSV data from Amazon Kinesis Data Streams and use Amazon Kinesis Data Firehose to convert data into Parquet.

Answer: C

NEW QUESTION 9

A manufacturing company has structured and unstructured data stored in an Amazon S3 bucket A Machine Learning Specialist wants to use SQL to run queries on this data. Which solution requires the LEAST effort to be able to query this data?

- A. Use AWS Data Pipeline to transform the data and Amazon RDS to run queries.

- B. Use AWS Glue to catalogue the data and Amazon Athena to run queries

- C. Use AWS Batch to run ETL on the data and Amazon Aurora to run the quenes

- D. Use AWS Lambda to transform the data and Amazon Kinesis Data Analytics to run queries

Answer: D

NEW QUESTION 10

A gaming company has launched an online game where people can start playing for free but they need to pay if they choose to use certain features The company needs to build an automated system to predict whether or not a new user will become a paid user within 1 year The company has gathered a labeled dataset from 1 million users

The training dataset consists of 1.000 positive samples (from users who ended up paying within 1 year) and 999.1 negative samples (from users who did not use any paid features) Each data sample consists of 200 features including user age, device, location, and play patterns

Using this dataset for training, the Data Science team trained a random forest model that converged with over 99% accuracy on the training set However, the prediction results on a test dataset were not satisfactory.

Which of the following approaches should the Data Science team take to mitigate this issue? (Select TWO.)

- A. Add more deep trees to the random forest to enable the model to learn more features.

- B. indicate a copy of the samples in the test database in the training dataset

- C. Generate more positive samples by duplicating the positive samples and adding a small amount of noise to the duplicated data.

- D. Change the cost function so that false negatives have a higher impact on the cost value than false positives

- E. Change the cost function so that false positives have a higher impact on the cost value than false negatives

Answer: BD

NEW QUESTION 11

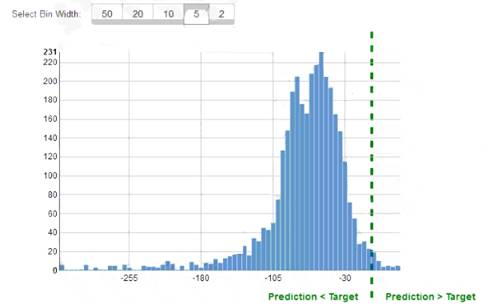

While reviewing the histogram for residuals on regression evaluation data a Machine Learning Specialist notices that the residuals do not form a zero-centered bell shape as shown What does this mean?

- A. The model might have prediction errors over a range of target values.

- B. The dataset cannot be accurately represented using the regression model

- C. There are too many variables in the model

- D. The model is predicting its target values perfectly.

Answer: D

NEW QUESTION 12

A Machine Learning Specialist needs to create a data repository to hold a large amount of time-based training data for a new model. In the source system, new files are added every hour Throughout a single 24-hour period, the volume of hourly updates will change significantly. The Specialist always wants to train on the last 24 hours of the data

Which type of data repository is the MOST cost-effective solution?

- A. An Amazon EBS-backed Amazon EC2 instance with hourly directories

- B. An Amazon RDS database with hourly table partitions

- C. An Amazon S3 data lake with hourly object prefixes

- D. An Amazon EMR cluster with hourly hive partitions on Amazon EBS volumes

Answer: C

NEW QUESTION 13

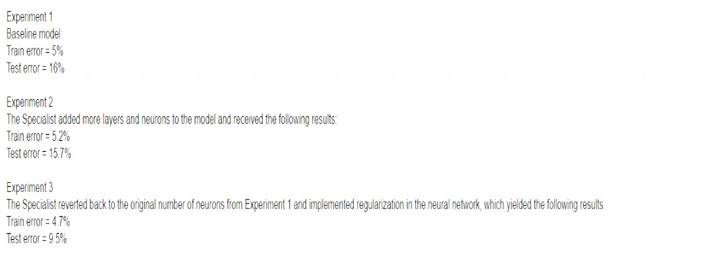

An Machine Learning Specialist discover the following statistics while experimenting on a model.

What can the Specialist from the experiments?

- A. The model In Experiment 1 had a high variance error lhat was reduced in Experiment 3 by regularization Experiment 2 shows that there is minimal bias error in Experiment 1

- B. The model in Experiment 1 had a high bias error that was reduced in Experiment 3 by regularization Experiment 2 shows that there is minimal variance error in Experiment 1

- C. The model in Experiment 1 had a high bias error and a high variance error that were reduced in Experiment 3 by regularization Experiment 2 shows thai high bias cannot be reduced by increasing layers and neurons in the model

- D. The model in Experiment 1 had a high random noise error that was reduced in Expenment 3 by regularization Expenment 2 shows that random noise cannot be reduced by increasing layers and neurons in the model

Answer: C

NEW QUESTION 14

A Machine Learning Specialist trained a regression model, but the first iteration needs optimizing. The Specialist needs to understand whether the model is more frequently overestimating or underestimating the

target.

What option can the Specialist use to determine whether it is overestimating or underestimating the target value?

- A. Root Mean Square Error (RMSE)

- B. Residual plots

- C. Area under the curve

- D. Confusion matrix

Answer: C

NEW QUESTION 15

A Machine Learning Specialist is packaging a custom ResNet model into a Docker container so the company can leverage Amazon SageMaker for training The Specialist is using Amazon EC2 P3 instances to train the model and needs to properly configure the Docker container to leverage the NVIDIA GPUs

What does the Specialist need to do1?

- A. Bundle the NVIDIA drivers with the Docker image

- B. Build the Docker container to be NVIDIA-Docker compatible

- C. Organize the Docker container's file structure to execute on GPU instances.

- D. Set the GPU flag in the Amazon SageMaker Create TrainingJob request body

Answer: A

NEW QUESTION 16

An agency collects census information within a country to determine healthcare and social program needs by province and city. The census form collects responses for approximately 500 questions from each citizen

Which combination of algorithms would provide the appropriate insights? (Select TWO )

- A. The factorization machines (FM) algorithm

- B. The Latent Dirichlet Allocation (LDA) algorithm

- C. The principal component analysis (PCA) algorithm

- D. The k-means algorithm

- E. The Random Cut Forest (RCF) algorithm

Answer: CD

Explanation:

The PCA and K-means algorithms are useful in collection of data using census form.

NEW QUESTION 17

A Machine Learning Specialist working for an online fashion company wants to build a data ingestion solution for the company's Amazon S3-based data lake.

The Specialist wants to create a set of ingestion mechanisms that will enable future capabilities comprised of:

• Real-time analytics

• Interactive analytics of historical data

• Clickstream analytics

• Product recommendations

Which services should the Specialist use?

- A. AWS Glue as the data dialog; Amazon Kinesis Data Streams and Amazon Kinesis Data Analytics for real-time data insights; Amazon Kinesis Data Firehose for delivery to Amazon ES for clickstream analytics; Amazon EMR to generate personalized product recommendations

- B. Amazon Athena as the data catalog; Amazon Kinesis Data Streams and Amazon Kinesis Data Analytics for near-realtime data insights; Amazon Kinesis Data Firehose for clickstream analytics; AWS Glue to generate personalized product recommendations

- C. AWS Glue as the data catalog; Amazon Kinesis Data Streams and Amazon Kinesis Data Analytics for historical data insights; Amazon Kinesis Data Firehose for delivery to Amazon ES for clickstream analytics; Amazon EMR to generate personalized product recommendations

- D. Amazon Athena as the data catalog; Amazon Kinesis Data Streams and Amazon Kinesis Data Analytics for historical data insights; Amazon DynamoDB streams for clickstream analytics; AWS Glue to generate personalized product recommendations

Answer: A

NEW QUESTION 18

A Machine Learning Specialist built an image classification deep learning model. However the Specialist ran into an overfitting problem in which the training and testing accuracies were 99% and 75%r respectively.

How should the Specialist address this issue and what is the reason behind it?

- A. The learning rate should be increased because the optimization process was trapped at a local minimum.

- B. The dropout rate at the flatten layer should be increased because the model is not generalized enough.

- C. The dimensionality of dense layer next to the flatten layer should be increased because the model is not complex enough.

- D. The epoch number should be increased because the optimization process was terminated before it reached the global minimum.

Answer: D

NEW QUESTION 19

Which of the following metrics should a Machine Learning Specialist generally use to compare/evaluate machine learning classification models against each other?

- A. Recall

- B. Misclassification rate

- C. Mean absolute percentage error (MAPE)

- D. Area Under the ROC Curve (AUC)

Answer: A

NEW QUESTION 20

A Machine Learning Specialist must build out a process to query a dataset on Amazon S3 using Amazon Athena The dataset contains more than 800.000 records stored as plaintext CSV files Each record contains 200 columns and is approximately 1 5 MB in size Most queries will span 5 to 10 columns only

How should the Machine Learning Specialist transform the dataset to minimize query runtime?

- A. Convert the records to Apache Parquet format

- B. Convert the records to JSON format

- C. Convert the records to GZIP CSV format

- D. Convert the records to XML format

Answer: A

NEW QUESTION 21

A Machine Learning Specialist is using Apache Spark for pre-processing training data As part of the Spark pipeline, the Specialist wants to use Amazon SageMaker for training a model and hosting it Which of the following would the Specialist do to integrate the Spark application with SageMaker? (Select THREE )

- A. Download the AWS SDK for the Spark environment

- B. Install the SageMaker Spark library in the Spark environment.

- C. Use the appropriate estimator from the SageMaker Spark Library to train a model.

- D. Compress the training data into a ZIP file and upload it to a pre-defined Amazon S3 bucket.

- E. Use the sageMakerMode

- F. transform method to get inferences from the model hosted in SageMaker

- G. Convert the DataFrame object to a CSV file, and use the CSV file as input for obtaining inferences from SageMaker.

Answer: DEF

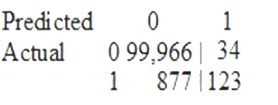

NEW QUESTION 22

A Data Scientist is developing a machine learning model to classify whether a financial transaction is fraudulent. The labeled data available for training consists of 100,000 non-fraudulent observations and 1,000 fraudulent observations.

The Data Scientist applies the XGBoost algorithm to the data, resulting in the following confusion matrix when the trained model is applied to a previously unseen validation dataset. The accuracy of the model is 99.1%, but the Data Scientist has been asked to reduce the number of false negatives.

Which combination of steps should the Data Scientist take to reduce the number of false positive predictions by the model? (Select TWO.)

- A. Change the XGBoost eval_metric parameter to optimize based on rmse instead of error.

- B. Increase the XGBoost scale_pos_weight parameter to adjust the balance of positive and negative weights.

- C. Increase the XGBoost max_depth parameter because the model is currently underfitting the data.

- D. Change the XGBoost evaljnetric parameter to optimize based on AUC instead of error.

- E. Decrease the XGBoost max_depth parameter because the model is currently overfitting the data.

Answer: DE

NEW QUESTION 23

......

Thanks for reading the newest MLS-C01 exam dumps! We recommend you to try the PREMIUM Downloadfreepdf.net MLS-C01 dumps in VCE and PDF here: https://www.downloadfreepdf.net/MLS-C01-pdf-download.html (105 Q&As Dumps)