
Free online SAA-C03 questions and answers (new version):

NEW QUESTION 1

A company uses Amazon EC2 instances to host its internal systems. As part of a deployment operation, an administrator tries to use the AWS CLI to terminate an EC2 instance. However, the administrator receives a 403 (Access Denied) error message.

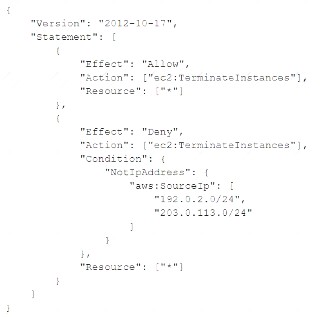

The administrator is using an IAM role that has the following 1AM policy attached:

What is the cause of the unsuccessful request?

- A. The EC2 instance has a resource-based policy with a Deny statement.

- B. The principal has not been specified in the policy statement.

- C. The "Action" field does not grant the actions that are required to terminate the EC2 instance.

- D. The request to terminate the EC2 instance does not originate from the CIDR blocks 192.0.2.0/24 or 203.0.113.0/24.

Answer: B

NEW QUESTION 2

A company is launching a new application and will display application metrics on an Amazon CloudWatch dashboard. The company’s product manager needs to access this dashboard periodically. The product manager does not have an AWS account. A solution architect must provide access to the product manager by following the principle of least privilege.

Which solution will meet these requirements?

- A. Share the dashboard from the CloudWatch console. Enter the product manager’s email address, and complete the sharing steps. Provide a shareable link for the dashboard to the product manager.

- B. Create an IAM user specifically for the product manager. Attach the CloudWatchReadOnlyAccess AWS managed policy to the user. Share the new login credentials with the product manager. Share the browser URL of the correct dashboard with the product manager.

- C. Create an IAM user for the company’s employees. Attach the ViewOnlyAccess AWS managed policy to the IAM user. Share the new login credentials with the product manager. Ask the product manager to navigate to the CloudWatch console and locate the dashboard by name in the Dashboards section.

- D. Deploy a bastion server in a public subnet. When the product manager requires access to the dashboard, start the server and share the RDP credentials. On the bastion server, ensure that the browser is configured to open the dashboard URL with cached AWS credentials that have appropriate permissions to view the dashboard.

Answer: A

NEW QUESTION 3

A solutions architect is creating a new VPC design. There are two public subnets for the load balancer, two private subnets for web servers, and two private subnets for MySQL. The web servers use only HTTPS. The solutions architect has already created a security group for the load balancer allowing port 443 from 0.0.0.0/0.

Company policy requires that each resource has the least access required to still be able to perform its tasks. Which additional configuration strategy should the solutions architect use to meet these requirements?

- A. Create a security group for the web servers and allow port 443 from 0.0.0.0/0. Create a security group for the MySQL servers and allow port 3306 from the web servers security group.

- B. Create a network ACL for the web servers and allow port 443 from 0.0.0.0/0. Create a network ACL for the MySQL servers and allow port 3306 from the web servers security group.

- C. Create a security group for the web servers and allow port 443 from the load balancer. Create a security group for the MySQL servers and allow port 3306 from the web servers security group.

- D. Create a network ACL for the web servers and allow port 443 from the load balancer. Create a network ACL for the MySQL servers and allow port 3306 from the web servers security group.

Answer: C
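As a rough illustration of the chained security group pattern in this question, the sketch below builds ingress rules in the shape that boto3's `authorize_security_group_ingress` accepts for its `IpPermissions` parameter. The helper name and the group IDs are hypothetical placeholders, and nothing here calls AWS.

```python
def ingress_from_group(port: int, source_group_id: str) -> dict:
    """Build one TCP ingress rule that admits traffic only from another security group."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        # Referencing a group instead of a CIDR range keeps the rule as narrow as possible.
        "UserIdGroupPairs": [{"GroupId": source_group_id}],
    }


# Hypothetical security group IDs, for illustration only.
LB_SG = "sg-lb-example"
WEB_SG = "sg-web-example"

# Web servers accept HTTPS only from the load balancer's security group.
web_rule = ingress_from_group(443, LB_SG)
# MySQL servers accept port 3306 only from the web servers' security group.
db_rule = ingress_from_group(3306, WEB_SG)
```

Neither rule contains an `IpRanges` entry, so no CIDR block such as 0.0.0.0/0 is opened on the web or database tier.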

NEW QUESTION 4

A company needs to retain application log files for a critical application for 10 years. The application team regularly accesses logs from the past month for troubleshooting, but logs older than 1 month are rarely accessed. The application generates more than 10 TB of logs per month.

Which storage option meets these requirements MOST cost-effectively?

- A. Store the logs in Amazon S3. Use AWS Backup to move logs more than 1 month old to S3 Glacier Deep Archive.

- B. Store the logs in Amazon S3. Use S3 Lifecycle policies to move logs more than 1 month old to S3 Glacier Deep Archive.

- C. Store the logs in Amazon CloudWatch Logs. Use AWS Backup to move logs more than 1 month old to S3 Glacier Deep Archive.

- D. Store the logs in Amazon CloudWatch Logs. Use Amazon S3 Lifecycle policies to move logs more than 1 month old to S3 Glacier Deep Archive.

Answer: B
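A minimal sketch of what such a lifecycle rule could look like, written as the dictionary that boto3's `put_bucket_lifecycle_configuration` takes as `LifecycleConfiguration`. The rule ID, the 30-day reading of "1 month", and the 3650-day expiration (10 years, ignoring leap days) are assumptions made for illustration; the question itself only requires the transition.

```python
def log_lifecycle_configuration(transition_days: int = 30,
                                retention_days: int = 3650) -> dict:
    """Return a lifecycle configuration that archives old logs, then expires them."""
    return {
        "Rules": [
            {
                "ID": "archive-old-logs",      # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": ""},      # empty prefix: apply to every object
                "Transitions": [
                    {"Days": transition_days, "StorageClass": "DEEP_ARCHIVE"}
                ],
                # Assumption: logs may be deleted once the retention period ends.
                "Expiration": {"Days": retention_days},
            }
        ]
    }


config = log_lifecycle_configuration()
```

The resulting `config` could then be passed to the S3 client together with a bucket name; the call is omitted here so the sketch runs without AWS credentials.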

NEW QUESTION 5

A company hosts a containerized web application on a fleet of on-premises servers that process incoming requests. The number of requests is growing quickly. The on-premises servers cannot handle the increased number of requests. The company wants to move the application to AWS with minimum code changes and minimum development effort.

Which solution will meet these requirements with the LEAST operational overhead?

- A. Use AWS Fargate on Amazon Elastic Container Service (Amazon ECS) to run the containerized web application with Service Auto Scaling. Use an Application Load Balancer to distribute the incoming requests.

- B. Use two Amazon EC2 instances to host the containerized web application. Use an Application Load Balancer to distribute the incoming requests.

- C. Use AWS Lambda with new code that uses one of the supported languages. Create multiple Lambda functions to support the load. Use Amazon API Gateway as an entry point to the Lambda functions.

- D. Use a high performance computing (HPC) solution such as AWS ParallelCluster to establish an HPC cluster that can process the incoming requests at the appropriate scale.

Answer: A

NEW QUESTION 6

A solutions architect is designing the cloud architecture for a new application being deployed on AWS. The process should run in parallel while adding and removing application nodes as needed based on the number of jobs to be processed. The processor application is stateless. The solutions architect must ensure that the application is loosely coupled and the job items are durably stored.

Which design should the solutions architect use?

- A. Create an Amazon SNS topic to send the jobs that need to be processed. Create an Amazon Machine Image (AMI) that consists of the processor application. Create a launch configuration that uses the AMI. Create an Auto Scaling group using the launch configuration. Set the scaling policy for the Auto Scaling group to add and remove nodes based on CPU usage.

- B. Create an Amazon SQS queue to hold the jobs that need to be processed. Create an Amazon Machine Image (AMI) that consists of the processor application. Create a launch configuration that uses the AMI. Create an Auto Scaling group using the launch configuration. Set the scaling policy for the Auto Scaling group to add and remove nodes based on network usage.

- C. Create an Amazon SQS queue to hold the jobs that need to be processed. Create an Amazon Machine Image (AMI) that consists of the processor application. Create a launch template that uses the AMI. Create an Auto Scaling group using the launch template. Set the scaling policy for the Auto Scaling group to add and remove nodes based on the number of items in the SQS queue.

- D. Create an Amazon SNS topic to send the jobs that need to be processed. Create an Amazon Machine Image (AMI) that consists of the processor application. Create a launch template that uses the AMI. Create an Auto Scaling group using the launch template. Set the scaling policy for the Auto Scaling group to add and remove nodes based on the number of messages published to the SNS topic.

Answer: C

Explanation:

"Create an Amazon SQS queue to hold the jobs that need to be processed. Create an Amazon EC2 Auto Scaling group for the compute application. Set the scaling policy for the Auto Scaling group to add and remove nodes based on the number of items in the SQS queue."

In this case, we need a durable and loosely coupled solution for storing jobs. Amazon SQS is ideal for this use case, and scaling can be configured dynamically based on the number of jobs waiting in the queue. To configure this scaling, you can use the backlog per instance metric, with the target value being the acceptable backlog per instance to maintain. You can calculate these numbers as follows:

Backlog per instance: To calculate your backlog per instance, start with the ApproximateNumberOfMessages queue attribute to determine the length of the SQS queue.
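The arithmetic behind the backlog per instance metric can be sketched as follows. This assumes the approach described in the Amazon EC2 Auto Scaling documentation, where the queue length is divided by the number of in-service instances and the target is the acceptable latency divided by the average processing time per message. The function names and the sample numbers are hypothetical.

```python
import math


def backlog_per_instance(approximate_number_of_messages: int,
                         in_service_instances: int) -> float:
    """Queue length divided by the number of instances currently in service."""
    if in_service_instances <= 0:
        raise ValueError("at least one in-service instance is required")
    return approximate_number_of_messages / in_service_instances


def acceptable_backlog_per_instance(acceptable_latency_s: float,
                                    avg_processing_time_s: float) -> float:
    """How many queued messages one instance can absorb within the latency budget."""
    return acceptable_latency_s / avg_processing_time_s


def instances_needed(approximate_number_of_messages: int,
                     target_backlog: float) -> int:
    """Smallest fleet size that keeps the backlog per instance at or below target."""
    return max(1, math.ceil(approximate_number_of_messages / target_backlog))


# Hypothetical numbers: 1500 queued messages, 10 instances,
# 0.5 s per message, and a 50 s latency budget.
current = backlog_per_instance(1500, 10)
target = acceptable_backlog_per_instance(50, 0.5)
desired_capacity = instances_needed(1500, target)
```

With these sample values the current backlog per instance exceeds the target, so a target tracking policy on this metric would scale the group out.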

NEW QUESTION 7

A development team runs monthly resource-intensive tests on its general purpose Amazon RDS for MySQL DB instance with Performance Insights enabled. The testing lasts for 48 hours once a month and is the only process that uses the database. The team wants to reduce the cost of running the tests without reducing the compute and memory attributes of the DB instance.

Which solution meets these requirements MOST cost-effectively?

- A. Stop the DB instance when tests are completed. Restart the DB instance when required.

- B. Use an Auto Scaling policy with the DB instance to automatically scale when tests are completed.

- C. Create a snapshot when tests are completed. Terminate the DB instance and restore the snapshot when required.

- D. Modify the DB instance to a low-capacity instance when tests are completed. Modify the DB instance again when required.

Answer: C

NEW QUESTION 8

A company hosts an application on multiple Amazon EC2 instances. The application processes messages from an Amazon SQS queue, writes to an Amazon RDS table, and deletes the message from the queue. Occasional duplicate records are found in the RDS table. The SQS queue does not contain any duplicate messages.

What should a solutions architect do to ensure messages are being processed once only?

- A. Use the CreateQueue API call to create a new queue.

- B. Use the AddPermission API call to add appropriate permissions.

- C. Use the ReceiveMessage API call to set an appropriate wait time.

- D. Use the ChangeMessageVisibility API call to increase the visibility timeout.

Answer: D

Explanation:

The visibility timeout begins when Amazon SQS returns a message. During this time, the consumer processes and deletes the message. However, if the consumer fails before deleting the message and your system doesn't call the DeleteMessage action for that message before the visibility timeout expires, the message becomes visible to other consumers and the message is received again. If a message must be received only once, your consumer should delete it within the duration of the visibility timeout. https://docs.aws.amazon.com/AWSSimpleQueueService/latest/SQSDeveloperGuide/sqs-visibility-timeout.html

Keyword: the application reads from an SQS queue and writes to Amazon RDS. Given this, option D fits best and the other options can be ruled out: option A would only create another queue, option B only adds permissions, and option C only retrieves messages.

FIFO queues are designed to never introduce duplicate messages. However, your message producer might introduce duplicates in certain scenarios: for example, if the producer sends a message, does not receive a response, and then resends the same message. Amazon SQS APIs provide deduplication functionality that prevents your message producer from sending duplicates. Any duplicates introduced by the message producer are removed within a 5-minute deduplication interval.

For standard queues, you might occasionally receive a duplicate copy of a message (at-least-once delivery). If you use a standard queue, you must design your applications to be idempotent (that is, they must not be affected adversely when processing the same message more than once).
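To make the idempotency point concrete, here is a small in-memory sketch of a consumer that ignores a message it has already processed. The class and its fields are hypothetical, and the Python set and list stand in for what would normally be a database table with a unique key on the message ID; no SQS or RDS calls are made.

```python
class IdempotentProcessor:
    """Writes each message at most once, even if the queue delivers it again."""

    def __init__(self) -> None:
        self._seen_ids: set[str] = set()  # stand-in for a unique-key column
        self.rows: list[str] = []         # stand-in for the RDS table

    def handle(self, message_id: str, body: str) -> bool:
        """Return True if a row was written, False if the message was a repeat."""
        if message_id in self._seen_ids:
            return False                  # redelivery: skip the write
        self.rows.append(body)
        self._seen_ids.add(message_id)
        return True


processor = IdempotentProcessor()
first = processor.handle("msg-1", "order-1001")
# Same message received again, for example after the visibility timeout expired.
second = processor.handle("msg-1", "order-1001")
```

Increasing the visibility timeout reduces how often a second delivery happens, while an idempotent write like this keeps a second delivery from creating a duplicate record.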

NEW QUESTION 9

A company wants to migrate its on-premises application to AWS. The application produces output files that vary in size from tens of gigabytes to hundreds of terabytes. The application data must be stored in a standard file system structure.

The company wants a solution that scales automatically, is highly available, and requires minimum operational overhead.

Which solution will meet these requirements?

- A. Migrate the application to run as containers on Amazon Elastic Container Service (Amazon ECS). Use Amazon S3 for storage.

- B. Migrate the application to run as containers on Amazon Elastic Kubernetes Service (Amazon EKS). Use Amazon Elastic Block Store (Amazon EBS) for storage.

- C. Migrate the application to Amazon EC2 instances in a Multi-AZ Auto Scaling group. Use Amazon Elastic File System (Amazon EFS) for storage.

- D. Migrate the application to Amazon EC2 instances in a Multi-AZ Auto Scaling group. Use Amazon Elastic Block Store (Amazon EBS) for storage.

Answer: C

NEW QUESTION 10

A solutions architect is tasked with transferring 750 TB of data from a network-attached file system located at a branch office to Amazon S3 Glacier. The solution must avoid saturating the branch office's low-bandwidth internet connection.

What is the MOST cost-effective solution?

- A. Create a site-to-site VPN tunnel to an Amazon S3 bucket and transfer the files directly. Create a bucket policy to enforce a VPC endpoint.

- B. Order 10 AWS Snowball appliances and select an S3 Glacier vault as the destination. Create a bucket policy to enforce a VPC endpoint.

- C. Mount the network-attached file system to Amazon S3 and copy the files directly. Create a lifecycle policy to transition the S3 objects to Amazon S3 Glacier.

- D. Order 10 AWS Snowball appliances and select an Amazon S3 bucket as the destination. Create a lifecycle policy to transition the S3 objects to Amazon S3 Glacier.

Answer: D

NEW QUESTION 11

A company's ecommerce website has unpredictable traffic and uses AWS Lambda functions to directly access a private Amazon RDS for PostgreSQL DB instance. The company wants to maintain predictable database performance and ensure that the Lambda invocations do not overload the database with too many connections.

What should a solutions architect do to meet these requirements?

- A. Point the client driver at an RDS custom endpoint. Deploy the Lambda functions inside a VPC.

- B. Point the client driver at an RDS proxy endpoint. Deploy the Lambda functions inside a VPC.

- C. Point the client driver at an RDS custom endpoint. Deploy the Lambda functions outside a VPC.

- D. Point the client driver at an RDS proxy endpoint. Deploy the Lambda functions outside a VPC.

Answer: B

NEW QUESTION 12

A company runs an on-premises application that is powered by a MySQL database. The company is migrating the application to AWS to increase the application's elasticity and availability.

The current architecture shows heavy read activity on the database during times of normal operation. Every 4 hours, the company's development team pulls a full export of the production database to populate a database in the staging environment. During this period, users experience unacceptable application latency. The development team is unable to use the staging environment until the procedure completes.

A solutions architect must recommend a replacement architecture that alleviates the application latency issue. The replacement architecture also must give the development team the ability to continue using the staging environment without delay.

Which solution meets these requirements?

- A. Use Amazon Aurora MySQL with Multi-AZ Aurora Replicas for production. Populate the staging database by implementing a backup and restore process that uses the mysqldump utility.

- B. Use Amazon Aurora MySQL with Multi-AZ Aurora Replicas for production. Use database cloning to create the staging database on-demand.

- C. Use Amazon RDS for MySQL with a Multi-AZ deployment and read replicas for production. Use the standby instance for the staging database.

- D. Use Amazon RDS for MySQL with a Multi-AZ deployment and read replicas for production. Populate the staging database by implementing a backup and restore process that uses the mysqldump utility.

Answer: B

NEW QUESTION 13

A company uses a popular content management system (CMS) for its corporate website. However, the required patching and maintenance are burdensome. The company is redesigning its website and wants a new solution. The website will be updated four times a year and does not need to have any dynamic content available. The solution must provide high scalability and enhanced security.

Which combination of changes will meet these requirements with the LEAST operational overhead? (Choose two.)

- A. Deploy an AWS WAF web ACL in front of the website to provide HTTPS functionality.

- B. Create and deploy an AWS Lambda function to manage and serve the website content.

- C. Create the new website and an Amazon S3 bucket. Deploy the website on the S3 bucket with static website hosting enabled.

- D. Create the new website. Deploy the website by using an Auto Scaling group of Amazon EC2 instances behind an Application Load Balancer.

Answer: AD

NEW QUESTION 14

An online retail company has more than 50 million active customers and receives more than 25,000 orders each day. The company collects purchase data for customers and stores this data in Amazon S3. Additional customer data is stored in Amazon RDS.

The company wants to make all the data available to various teams so that the teams can perform analytics. The solution must provide the ability to manage fine-grained permissions for the data and must minimize operational overhead.

Which solution will meet these requirements?

- A. Migrate the purchase data to write directly to Amazon RDS. Use RDS access controls to limit access.

- B. Schedule an AWS Lambda function to periodically copy data from Amazon RDS to Amazon S3. Create an AWS Glue crawler. Use Amazon Athena to query the data. Use S3 policies to limit access.

- C. Create a data lake by using AWS Lake Formation. Create an AWS Glue JDBC connection to Amazon RDS. Register the S3 bucket in Lake Formation. Use Lake Formation access controls to limit access.

- D. Create an Amazon Redshift cluster. Schedule an AWS Lambda function to periodically copy data from Amazon S3 and Amazon RDS to Amazon Redshift. Use Amazon Redshift access controls to limit access.

Answer: C

NEW QUESTION 15

A company is migrating its on-premises PostgreSQL database to Amazon Aurora PostgreSQL. The on-premises database must remain online and accessible during the migration. The Aurora database must remain synchronized with the on-premises database.

Which combination of actions must a solutions architect take to meet these requirements? (Select TWO.)

- A. Create an ongoing replication task.

- B. Create a database backup of the on-premises database.

- C. Create an AWS Database Migration Service (AWS DMS) replication server.

- D. Convert the database schema by using the AWS Schema Conversion Tool (AWS SCT).

- E. Create an Amazon EventBridge (Amazon CloudWatch Events) rule to monitor the database synchronization.

Answer: CD

NEW QUESTION 16

A business's backup data totals 700 terabytes (TB) and is kept in network attached storage (NAS) at its data center. This backup data must be available in the event of occasional regulatory inquiries and preserved for a period of seven years. The organization has chosen to relocate its backup data from its on-premises data center to Amazon Web Services (AWS). Within one month, the migration must be completed. The company's public internet connection provides 500 Mbps of dedicated capacity for data transport.

What should a solutions architect do to ensure that data is migrated and stored at the LOWEST possible cost?

- A. Order AWS Snowball devices to transfer the data. Use a lifecycle policy to transition the files to Amazon S3 Glacier Deep Archive.

- B. Deploy a VPN connection between the data center and Amazon VPC. Use the AWS CLI to copy the data from on premises to Amazon S3 Glacier.

- C. Provision a 500 Mbps AWS Direct Connect connection and transfer the data to Amazon S3. Use a lifecycle policy to transition the files to Amazon S3 Glacier Deep Archive.

- D. Use AWS DataSync to transfer the data and deploy a DataSync agent on premises. Use the DataSync task to copy files from the on-premises NAS storage to Amazon S3 Glacier.

Answer: A
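A quick back-of-the-envelope check shows why a network transfer cannot meet the one-month deadline here. The sketch assumes decimal units (1 TB = 10^12 bytes, 1 Mbps = 10^6 bits per second) and a fully utilized link with no protocol overhead, which is optimistic; the function name is made up for illustration.

```python
def transfer_days(data_terabytes: float, link_mbps: float,
                  utilization: float = 1.0) -> float:
    """Estimate how many days it takes to push the data over the link."""
    total_bits = data_terabytes * 1e12 * 8            # decimal terabytes to bits
    bits_per_second = link_mbps * 1e6 * utilization   # usable link throughput
    seconds = total_bits / bits_per_second
    return seconds / 86_400                           # seconds per day


# 700 TB over the 500 Mbps connection from the question.
days = transfer_days(700, 500)
fits_in_one_month = days <= 30
```

Under these assumptions the transfer takes roughly 130 days, more than four times the allowed month, which is why shipping the data on Snowball devices is the practical option.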

NEW QUESTION 17

A company hosts a serverless application on AWS. The application uses Amazon API Gateway, AWS Lambda, and an Amazon RDS for PostgreSQL database. The company notices an increase in application errors that result from database connection timeouts during times of peak traffic or unpredictable traffic. The company needs a solution that reduces the application failures with the least amount of change to the code.

What should a solutions architect do to meet these requirements?

- A. Reduce the Lambda concurrency rate.

- B. Enable RDS Proxy on the RDS DB instance.

- C. Resize the RDS DB instance class to accept more connections.

- D. Migrate the database to Amazon DynamoDB with on-demand scaling.

Answer: B

NEW QUESTION 18

......
